By Ping Yeung Ph.D., Principal Engineer, Siemens EDA

Many companies have used formal verification to verify complex SoCs [1][2] and safety-critical designs. Using formal verification to confirm design functionalities and uncover functional bugs is emerging as an efficient verification approach. Although formal verification will not handle the complexity of a design at the SoC level, it is an efficient tool to verify the design blocks or IP comprehensively.

Unfortunately, the formal coverage closure process is not well defined. Different companies or even different project teams within a company may use different criteria. Experienced formal verification users know how to refine the results of formal verification intuitively. However, new users have found this skill challenging to master, leading to frustration and disappointment. In this article, we capture the refinement process in a step-by-step methodology and formulate it graphically so that it is easy to understand and replicate.

Success factors of formal verification

It is essential to understand the factors that determine the success of formal verification on a project. Various formal teams from multiple companies have documented the following six factors for success based on their experiences.

- The complexity of the design [5]

- The quality of the sub-goals and target assertions [4]

- The completeness of the interface constraints [3]

- The control and orchestration of the formal engines [5]

- The quality of the initial states for formal exploration [6]

- The formal expertise of the users [2]

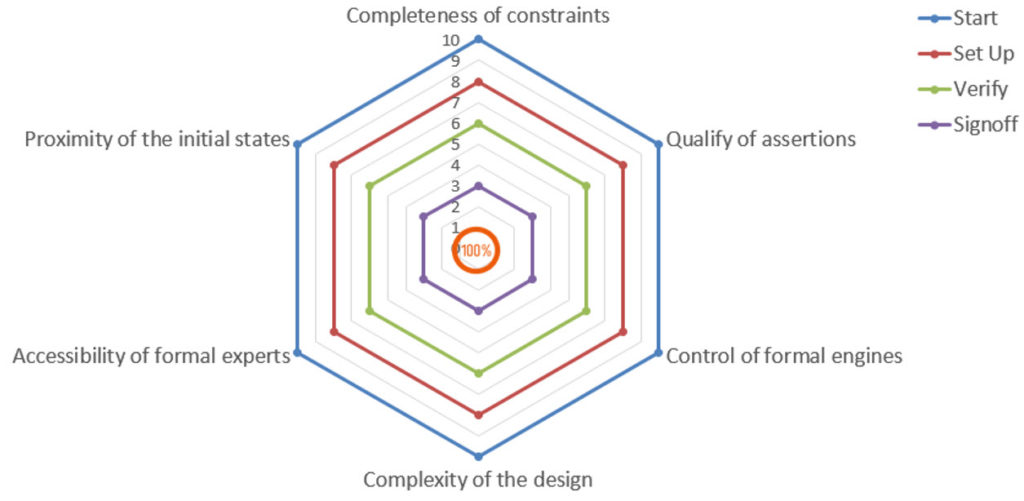

If we want to be successful in signing off a design with formal coverage, we will inevitably need to wrestle with all of the success factors mentioned above. To help us manage them and (maybe more importantly) allow managers to visualize them, we introduced the “spiral refinement” bug hunt radar (Figure 1). It captures the six factors in the axis of the radar chart. The objective is to identify the primary obstacle(s), such as the availability of formal expertise or completeness of interface constraints. Then we can gradually improve or refine the other factors to achieve formal coverage closure.

The “Spiral Refinement” formal coverage closure methodology

The formal coverage closure process consists of multiple phases and iterations. To simplify the explanation, we classified the process into three phases, as depicted in figure 1.

Phase 0 – Start: the original RTL design

Phase 1 – Set-Up: formal verification testplan, configuration, and abstraction

Phase 2 – Verify: formal proofs, bug exploring, and refinement

Phase 3 – Signoff: formal coverage closure

The goal of the setup phase is to gather all necessary information, create a formal verification test plan [9], and set up the formal verification environment. During this phase, we extract the targeted design functionalities from the design specification document. To form a formal verification test plan, we define the configurations and operating environment and capture a comprehensive list of requirements. Once the test plan is finalized, we begin writing properties to constrain the environment and capture the defined requirements. The initial stages of assertion development focus on refining the assertions and constraints to match the requirements. The users’ design knowledge, language proficiency, and formal experience will determine the efficiency of this phase.

The goal of phase 2 is to verify the properties in the design and fix any bugs found by formal verification. This is a refinement process that will require continuously improving each of the success factors to verify as many properties as possible. CPU resource is critical for this phase. Most design companies already have a server farm environment setup for functional simulation regression. We can establish a multi-processing environment by constraining the design based on the modes of operations, abstractions, and interfaces. We can configure the formal tool to dispatch hundreds of small jobs with different formal engines and techniques on the server farm environment. To help formally verify some complex assertions, we should be prepared to refine them continuously. Techniques [4] such as decomposition, helper or subgoal assertions, and assume-guarantee are commonly used. As explained in [6], if a design requires a special initialization sequence to set up the design correctly, it is better to start formal verification after the setup has been completed.

The goal of the signoff phase is to confirm that sufficient verification has been done, and we can sign off the block with formal verification. As the verification progresses, a stage is reached in which most of the targeted scenarios are verified. Assertions are proven or fired as anticipated (i.e., negative scenarios), while a few assertions may still be inconclusive. At this stage, for effective formal verification signoff, we face these critical questions:

- Are there sufficient assertions in place to cover all functionality of the design?

- Are there any gaps in assertion coverage concerning the targeted functionalities?

- Are the depths of inconclusive assertions sufficient to sign off the functionality?

We turn to formal coverage to address these questions. Different types of formal coverage are computed: reachability coverage, observability coverage, proof core coverage, and bounded proof coverage. These help us understand the quality of the assertions in the design as well as the actual elements that have been formally verified. At the same time, they identify unreachable elements in the design and potential over-constraining during formal verification.

Spiraling in on good results

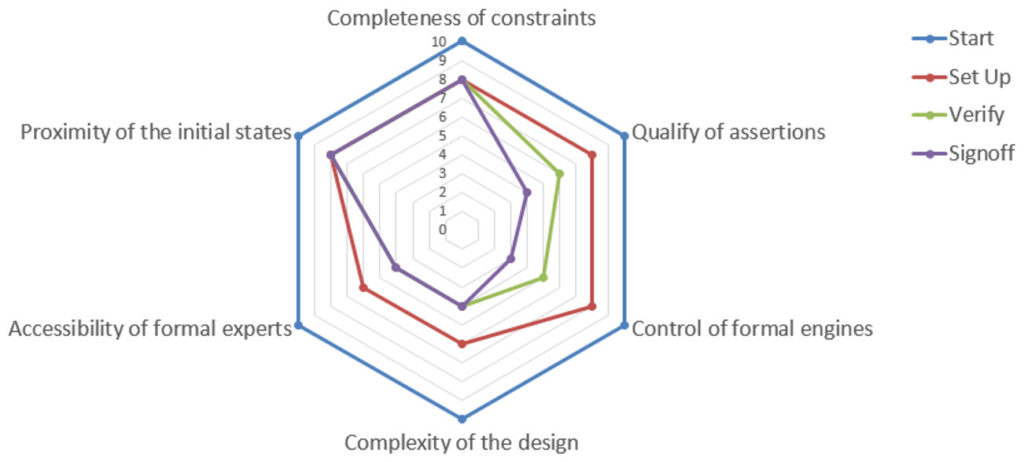

We will use an example design project to see how this works: a packet scheduler that prioritizes and transfers incoming packets based on a pre-programmed arbitration scheme. Figure 2 depicts how well we were able to apply the six factors for success in each of the three phases of our spiral refinement methodology for formal coverage closure.

Phase 1: Set Up

- Complexity of the design: the depth and breadth of the design made it inefficient for formal verification to handle directly. To improve efficiency, we reduced the number and sizes of channels, the packet length, and the amount of buffering for outstanding transactions. This was not difficult as the design is very configurable. The design team helped us generate multiple simple-and-true representations.

- Accessibility of formal experts: in-house formal experts were involved early to help with the design abstraction process. We had recognized their SVA knowledge and formal coding technique as one of the determinate factors for the project’s success.

- Control of formal engines: memories and unessential parts of the design had turned into black boxes. Preliminary formal runs and reachability analysis were done to confirm that the formal engines have adequate control of the design.

- Quality of the assertions: formal experts worked closely with designers to create the properties for the test plan and analyze potential failure scenarios at critical parts of the design. We developed complex assertions to check for the packets’ data integrity and credit-based scheduling schemes. Then observability coverage was used to measure the assertion density to ensure sufficient coverage of the critical functionalities.

- Proximity of the initial states: we used a known-good sequence to initialize the design. As shown in figure 2, it was kept constant for all phases.

- Completeness of the constraints: external and internal formal assertion IP had been acquired for the packet transfer protocol, AXI bus interfaces, configuration, and memory interfaces. They were kept constant for all phases.

Phase 2: Verify

- Complexity of the design: we reduced the design complexity further by customizing the design abstraction with regard to the focus of the formal run. This approach allowed us to launch more efficient formal runs concurrently.

- Accessibility of formal experts: formal experts and tool vendors helped partition and launch the formal runs onto multiple cores in the server farm environment.

- Control of formal engines: we ran formal verification using all formal engines to look for proofs and violations. We tried to be as efficient as possible by leveraging the four levels of multi-processing.

- Quality of the assertions: the tool provided feedback on the formal runs [5] by showing the engine health dynamically and the active logic being analyzed by formal verification. We decomposed complex assertions and added helper assertions to gain a better understanding of the formal progress. These assertions helped guide the formal process once they were proven. We continued to refine the assertions with data independence and non-determinism. These further improved the design’s controllability and formal efficiency.

- Proximity of the initial states: same a phase 1

- Completeness of the constraints: same a phase 1

Phase 3: Signoff

After reviewing the proven properties and debugging the violations in phase 2, we focused on the inconclusive properties and formal coverage in this final phase. It is part of the formal coverage closure methodology.

- Complexity of the design: we used the same design abstractions as in phase 2

- Accessibility of formal experts: same as in phase 2

- Control of formal engines: we changed the formal orchestration to perform observability and reachability analyses. The focus was to measure formal coverage on the proof and bounded proof assertions. We leveraged the same multi-processing environment that we had set up in phase 2.

- Quality of the assertions: Formal coverage was computed with various methods depending on the focus and the accuracy requirements. We focused on observability coverage to ensure there were sufficient assertions and the bounded proofs were deep enough to cover the intended properties.

- Proximity of the initial states: same a phase 1

- Completeness of the constraints: same a phase 1; but we also ran reachability analysis to confirm that we had not over-constrained the design.

Summary

Multiple companies have demonstrated that formal verification is a viable alternative for block-level design signoff [1] [2] and silicon bug hunts [3] [7]. The “spiral refinement” radar and methodology provide the necessary guidance for project teams to adopt this interactive process. It captures the success factors and helps guide the deployment of various approaches. The three phases — set up, verify, and signoff — help project teams establish a flow, schedule tasks, and set up reviews if required to move from one phase to another. The process is to identify any obstacle then gradually improve or refine each of the success factors so that formal verification can be transited from phase to phase. The overall goal is to validate the design’s functionalities and confirm the completion of formal coverage closure.

References

[1] Ram Narayan, “The future of formal model checking is NOW!”, DVCon 2014.[2] M Achutha KiranKumar et al., “Making Formal Property Verification Mainstream: An Intel® Graphics Experience,” DVCon India 2017

[3] Mark Eslinger, et al., “Every Cloud – Post-Silicon Bug Spurs Formal Verification Adoption,” DVCon 2015

[4] Jin Hou et al., “Handling Inconclusive Assertions in Formal Verification,” DVCon China 2018

[5] Jeremy Levitt et al., “It’s Been 24 Hours – Should I Kill My Formal Run?”, Workshop, DVCon 2019

[6] Ping Yeung et al., “Formal Bug Hunting with “River Fishing” Techniques,” DVCon 2019

[7] Ping Yeung et al., “Formal Verification Experiences: Spiral Refinement Methodology for Silicon Bug Hunt”, DVCon 2021

[8] Abhishek Anand et al., “Bounded Proof Sign-off with Formal Coverage,” DVCon 2021

[9] Harry Foster et al., “Guidelines for creating a formal verification testplan”, DVCon 2006