Autonomous vehicle technology is driving radical change in the automotive industry. Nearly every tech business seems to have joined the race to build the first fully autonomous vehicle (AV). However, recent crashes involving vehicles from powerhouses like Uber and Tesla are causing some to question the proliferation of self-driving cars: How long will it take to truly achieve level-5 autonomy?

Despite these persistent doubts, experts affirm that the industry’s future is inevitably autonomous and that it is just a matter of time before all cars become self-driving. Alex Rodrigues, CEO of Embark, says autonomous vehicles are “the only plausible strategy that we have as a society to get to zero [fatalities].” New research from the University of Michigan suggests it takes just one AV on the road to avoid “phantom traffic jams” and make conditions safer for all surrounding drivers.

Recent incidents with AVs have spurred more research into teaching autonomous vehicles to drive in the dark. According to the Insurance Institute for Highway Safety, pedestrian fatalities are rising fastest during hours when the sun is down, and more than three-quarters of pedestrian deaths happen at night. Night driving poses the same challenges for autonomous cars that it does for human drivers. There is an obvious solution to this: thermal sensors.

Flawed Sensors Are the Chief Obstacles to Full Autonomy

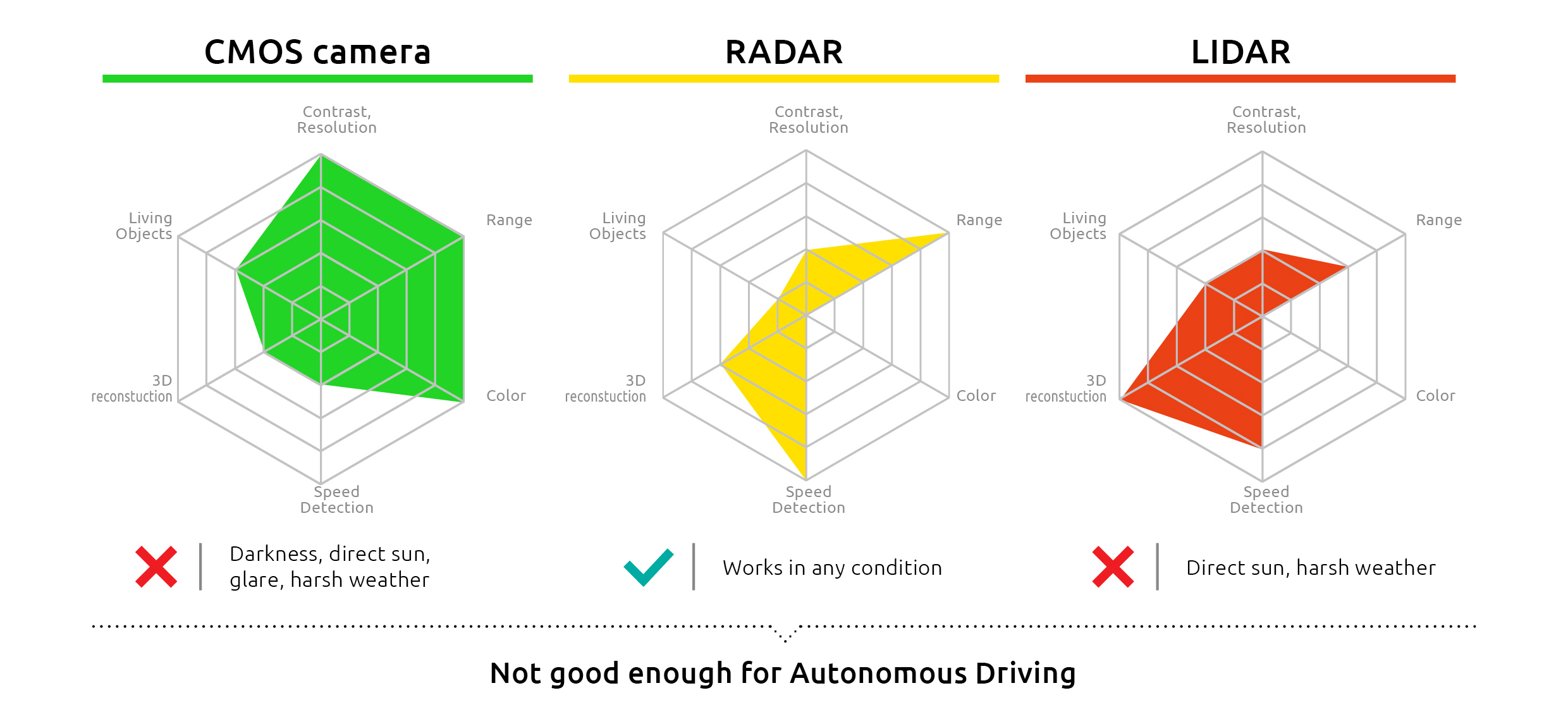

The reason fully autonomous vehicles have yet to take over roadways is that they lack sensing technologies sufficient to give them sight and perception in every scenario. The current sensors employed by many OEMs, such as lidar, radar, and cameras, each have perception problems that require a human driver to be ready to take control of the car at any moment.

Radar, for example, can detect long-range objects but cannot clearly identify them. Cameras are much better at identifying objects, but only at close range. Therefore, many automakers are combining radar and cameras to provide more complete detection and coverage of a vehicle’s surroundings: a radar sensor detects an object far down the road, and as the vehicle approaches it, a camera provides a clearer picture.
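
The radar-to-camera handoff described above can be sketched in a few lines of Python. This is only an illustration: the `Track` type, the `update_track` function, and the 80 m camera range are assumptions made for the example, not values from any automaker’s system.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed distance within which the camera can classify reliably.
# Illustrative only; real values depend on the optics and the software.
CAMERA_ID_RANGE_M = 80.0


@dataclass
class Track:
    distance_m: float            # range reported by radar
    label: Optional[str] = None  # class label, set once the camera can help


def update_track(radar_distance_m: float, camera_label: Optional[str]) -> Track:
    """Combine one radar range reading with an optional camera label."""
    track = Track(distance_m=radar_distance_m)
    # Accept the camera's label only inside its assumed useful range.
    if camera_label is not None and radar_distance_m <= CAMERA_ID_RANGE_M:
        track.label = camera_label
    return track
```

In this sketch, an object 200 m away is tracked by distance alone, and it gains a label such as `"pedestrian"` only once it is within the camera’s assumed range.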

Lidar (light detection and ranging) sensors are another key perception solution for most AVs, but there are situations when they don’t work. Like radar, lidar works by emitting signals and using their reflections to measure an object’s distance (radar uses radio waves; lidar uses laser light). Lidar sensors can provide a wider field of view than radar, but the solution remains cost-prohibitive for mass-market applications. Although several companies are attempting to produce lower-cost lidar sensors, these lower-resolution sensors cannot provide the detection and coverage needed to reach level-5 autonomy.
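
As a rough illustration of how a reflection yields a distance, the sketch below assumes a simple pulsed time-of-flight model, which is one common approach and not necessarily what any particular sensor uses. The signal travels to the object and back, so the range is half the round-trip time multiplied by the speed of light. The function name `range_from_round_trip` is hypothetical.

```python
# Radio waves and laser light both travel at the speed of light
# (vacuum value; air is close enough for this illustration).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance in metres to a reflecting object, from the echo's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Divide by two because the signal covers the distance twice.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0
```

Under this model, an echo that returns after one microsecond corresponds to an object roughly 150 m away.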

Other Obstacles to Full Autonomy

To compensate for each sensing technology’s weaknesses, automakers often outfit their AVs with several different solutions in a redundant mosaic of sensors, the idea being that where one sensor falls short, the others can pick up the slack. Yet even when these technologies accurately detect an autonomous vehicle’s surroundings, they can still fall victim to “false positives.”

A false positive, as the term is used here, is a case in which an autonomous vehicle successfully detects an object but wrongly determines that no evasive action is required. Experts are now calling the infamous Uber tragedy from March of this year (in which a self-driving Uber struck and killed a pedestrian who was crossing the street with a bicycle) a false positive. The vehicle’s sensors detected the victim, but its software wrongly determined that she wasn’t in danger and that no action was required. In fact, the National Transportation Safety Board recently released a report saying the vehicle detected the pedestrian six seconds before the accident, but the autonomous driving system software classified the pedestrian first as an unidentified object, then as a car, and then as a bicycle.

Autonomous vehicles’ software is designed to purposefully ignore certain objects, like an errant plastic bag drifting across the street. But Uber’s fatal incident shows that building a machine vision algorithm that can intelligently judge which detected objects are in danger from the vehicle, or pose a danger to it, is a challenge, and one of dire importance. Until false positives can be properly identified, autonomous vehicles cannot achieve safe, level-5 autonomy.

Another problem that confounds AVs is rural navigation. Urban environments have detailed three-dimensional maps that tell autonomous vehicles exactly where they are and what surrounds them; map-less rural environments leave AVs to rely essentially on GPS for navigation. While automakers are most vocal about their plans to deploy self-driving cars in cities as next-generation taxis, rural environments are equally in need of autonomous vehicles and should not be neglected by developers.

Proposed (but Inadequate) Solutions

To overcome the difficulties of rural navigation and deliver fully autonomous vehicles to every environment, some automakers are attempting to design AVs that can drive without a detailed map. They aim to use lidar sensors for detecting textural differences in the rural landscape, using the light waves’ reflections to tell the car where the flat road ends and where the organic grass begins.

To stay on track without a map’s help, the AV will pick a “local navigational goal”—a point on the road within the current view of the car. The lidar sensors will detect the car’s surroundings and generate a path to that local goal, which is constantly updated as the car moves forward.
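
The local-goal idea can be sketched as follows. The sketch assumes the lidar output has already been reduced to a list of points classified as road surface, given in the vehicle’s own frame. The function names and the rule of choosing the farthest visible road point are illustrative assumptions, not a description of any automaker’s planner.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x forward, y left) in metres, vehicle frame


def pick_local_goal(road_points: List[Point], view_range_m: float) -> Point:
    """Choose the farthest detected road point that is ahead and within view."""
    visible = [
        p for p in road_points
        if p[0] > 0 and math.hypot(p[0], p[1]) <= view_range_m
    ]
    if not visible:
        raise ValueError("no road points in view")
    return max(visible, key=lambda p: math.hypot(p[0], p[1]))


def heading_to_goal(goal: Point) -> float:
    """Heading from the vehicle to the goal, in radians (positive is left)."""
    return math.atan2(goal[1], goal[0])
```

A planner built this way would call `pick_local_goal` on every new lidar scan, so the goal keeps moving ahead of the car as it drives.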

But, as noted above, lidar is an inadequate sensing solution. High-resolution lidar sensors are too expensive for mass-market use, and less expensive, lower-resolution lidar sensors cannot effectively detect faraway obstacles. Thus, they are largely incapable of providing accurate detection and coverage.

FIR Technology Is the Only Solution

A new type of sensor using far infrared (FIR) technology can provide the complete and reliable coverage needed to make AVs safe and functional in any environment.

Unlike radar and lidar sensors, which must transmit and receive signals, an FIR camera simply senses the heat that objects radiate, making it a “passive” technology. Because it senses the far-infrared band, at wavelengths longer than visible light, an FIR camera generates a new layer of information, detecting objects that may not otherwise be perceptible to a camera, radar, or lidar. Besides an object’s temperature, an FIR camera also captures an object’s emissivity, that is, how effectively it emits heat. Since different materials have different emissivities, an FIR camera can distinguish objects in its path even when they are at similar temperatures.
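
To make the roles of temperature and emissivity concrete, the sketch below uses the Stefan-Boltzmann law, under which a surface radiates power per unit area equal to its emissivity times a constant times the fourth power of its absolute temperature. This is a simplification: the law gives the total over all wavelengths, whereas a real FIR camera senses only part of the infrared band. The emissivity values in the usage note are illustrative.

```python
# Stefan-Boltzmann constant in W / (m^2 K^4).
STEFAN_BOLTZMANN = 5.670374419e-8


def radiant_exitance(emissivity: float, temperature_k: float) -> float:
    """Power radiated per unit area (W/m^2) by a surface at the given temperature."""
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must be between 0 and 1")
    if temperature_k < 0:
        raise ValueError("temperature must be in kelvin and non-negative")
    return emissivity * STEFAN_BOLTZMANN * temperature_k ** 4
```

For example, two surfaces at the same 300 K temperature, one with an emissivity of 0.98 and one with an emissivity of 0.05, radiate very different amounts of power. That difference is why objects can stand apart in a thermal image even at equal temperature.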

With this information, an FIR camera can create a visual picture of the roadway at both near and far range. Thermal FIR also detects lane markings and the pose of pedestrians (e.g., which direction they’re facing) and can, in most cases, determine whether a pedestrian is stepping off the sidewalk and about to cross the road. The vehicle can then predict whether it is at risk of hitting the pedestrian, helping to avoid the challenge of false positives and enabling AVs to operate independently and safely in any kind of environment, whether urban or rural.

FIR has been used for decades in defense, security, firefighting, and construction, making it a mature and proven technology.

The Future of Autonomous Vehicle Technology

The future mass-market deployment of fully autonomous vehicles relies on FIR technology. BMW, a major automotive OEM, is using thermal-imaging cameras as part of its sensor suites for all self-driving prototypes, and many automakers favor using multiple FIR sensors for the highest level of safety.

To achieve full autonomy for the mass market, autonomous vehicle technology has many obstacles to overcome, none of which can be beaten without employing FIR technology.