Introduction

This is the concluding installment of an expanded version of an article that originally appeared in the September 2017 print edition of PD&D. It explores how advances in artificial intelligence (AI), advanced computing architectures, and low-cost, high-performance sensors are enabling a growing number of commercial applications for autonomous vehicles, drones, robots and other so-called “autonomous edge devices”.

Part 1 provided a brief introduction to AI/DL systems and how they differ from conventional embedded systems. This installment looks at how the technology is being applied in areas such as sensor fusion, autonomous vehicles, security systems, and robotics.

Part 2: AI Applications

Autonomous Vehicles Drive Sensor Fusion

Autonomous ground and aerial vehicles, which are expected to dominate transportation over the next ten years, continue to be the earliest and largest markets for GPUs and AI technologies. All major automobile manufacturers are racing to incorporate AI and deep learning (DL) into their cars, with plans to deliver vehicles with SAE (Society of Automotive Engineers) Level 3 (conditional automation) and potentially Level 4 (high automation) capabilities by 2020.

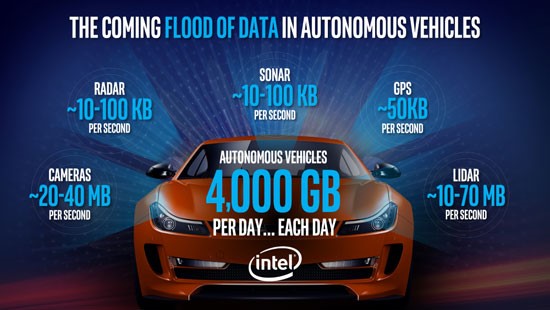

Although rapid advances in AI and DL algorithms are spearheading the transition to autonomous vehicles, this transformation would not be possible without the evolution of sensor fusion, which is largely taking place within the vehicle itself. Until now, the conventional embedded systems used in vehicle control applications have processed sensor data on a distributed web of microprocessors, each associated with one or a handful of sensors. In contrast, sensor fusion brings the raw data from the 60 to 100 sensors found on a typical car onto a single processing platform.

Tomorrow’s fully autonomous vehicles are expected to employ two to four times as many sensors to support advanced functions such as ultra-precise vehicle location and complete awareness of the surrounding environment.

Making sense of this flood of data, which arrives at widely different rates and latencies, requires an onboard sensor fusion platform that performs a series of challenging tasks. It begins by co-registering the raw sensor data, detecting low-level features (edges and blobs), and identifying preliminary correspondences between those features. The platform then associates the edge and blob features and fuses them into preliminary objects, which a succession of image understanding algorithms analyze to extract object shapes from the 2-D images and characterize their curvature and motion. As the system’s understanding of an object’s component parts grows, it builds up a cohesive model of the object’s overall characteristics.
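One small piece of co-registration can be illustrated with a minimal sketch: bringing streams that arrive at different rates, and with different latencies, onto a common time base. It assumes each sensor reports sorted, timestamped scalar readings with a known, fixed latency; the `align_stream` function and its linear-interpolation approach are hypothetical illustrations, not any vendor’s method. Real platforms also register data spatially (transforming every sensor into a common vehicle coordinate frame), which is omitted here.

```python
from bisect import bisect_left


def align_stream(ref_times, arrival_times, values, latency=0.0):
    """Resample one sensor stream onto a reference clock (e.g. camera frames).

    Hypothetical helper: assumes timestamps are sorted and share units, and
    that the sensor's latency (arrival time minus capture time) is fixed.
    Reference times outside the captured interval yield None.
    """
    # Shift arrival times back to the moment each reading was captured.
    capture_times = [t - latency for t in arrival_times]
    aligned = []
    for t in ref_times:
        i = bisect_left(capture_times, t)
        if i < len(capture_times) and capture_times[i] == t:
            aligned.append(values[i])  # exact timestamp match
        elif i == 0 or i == len(capture_times):
            aligned.append(None)  # before the first or after the last reading
        else:
            # Linear interpolation between the two bracketing readings.
            t0, t1 = capture_times[i - 1], capture_times[i]
            v0, v1 = values[i - 1], values[i]
            w = (t - t0) / (t1 - t0)
            aligned.append(v0 + w * (v1 - v0))
    return aligned


# Camera frames roughly every 33 ms; radar ranges (metres) every 50 ms,
# each arriving 10 ms after capture. All numbers are illustrative.
camera_ms = [0, 33, 66, 100]
radar_arrival_ms = [10, 60, 110]
radar_range_m = [20.0, 19.5, 19.0]
print(align_stream(camera_ms, radar_arrival_ms, radar_range_m, latency=10))
```

Linear interpolation is a reasonable default for slowly varying quantities such as range; fast-changing signals would call for a motion model instead.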

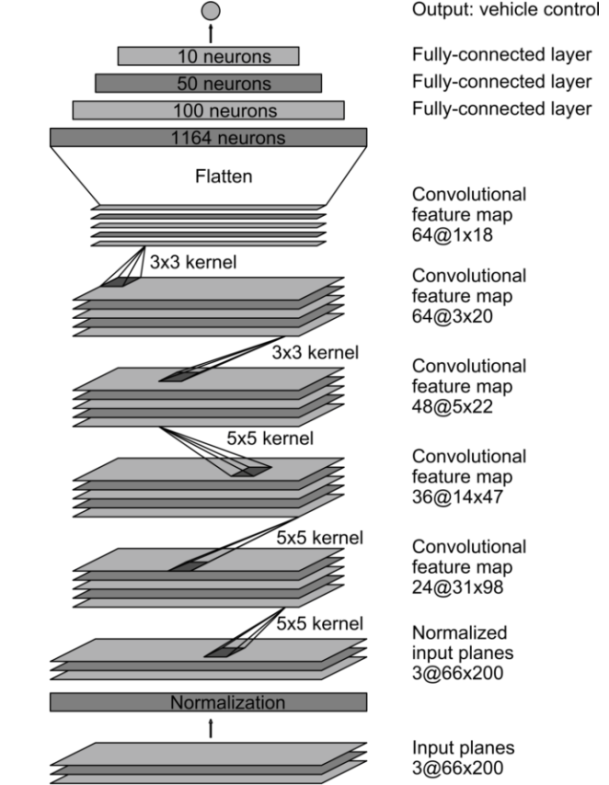

At the lower processing levels, convolutional neural networks (CNNs) are among the most useful methods for edge and feature extraction. Higher-level features (e.g., the hood of a car) are created by associating various components, even when image characteristics such as specularity make them show up as disparate segments in an image. This is why sensor fusion is so important: it allows (for example) consistency in range and location to help associate image segments into a single object that still higher-level algorithms can label.
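The range-consistency idea can be sketched as a toy example. It assumes each image segment already carries a horizontal pixel span plus a range value projected onto it from lidar or radar, and it merges two segments when they are close in the image and agree in range. The `Segment` class, the `group_segments` function, and both thresholds are hypothetical; this is not how any particular platform implements association.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    x_min: int       # left edge of the segment in the image (pixels)
    x_max: int       # right edge of the segment in the image (pixels)
    range_m: float   # range from lidar/radar projected onto the segment (metres)


def group_segments(segments, max_gap_px=10, max_range_diff_m=0.5):
    """Merge segments that sit close together horizontally AND report
    consistent range, so fragments of one physical object form one group.
    Returns the groups as sorted lists of segment names."""
    parent = list(range(len(segments)))  # union-find forest, one node per segment

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i, a in enumerate(segments):
        for j in range(i + 1, len(segments)):
            b = segments[j]
            # Horizontal gap between the two spans; zero or negative means overlap.
            gap = max(a.x_min, b.x_min) - min(a.x_max, b.x_max)
            if gap <= max_gap_px and abs(a.range_m - b.range_m) <= max_range_diff_m:
                parent[find(i)] = find(j)

    groups = {}
    for i, seg in enumerate(segments):
        groups.setdefault(find(i), []).append(seg.name)
    return sorted(sorted(names) for names in groups.values())


# A car hood split in two by a specular highlight, a distant tree that
# overlaps it in the image, and an unrelated sign at a similar range.
segments = [
    Segment("hood_left", 100, 180, 12.0),
    Segment("hood_right", 186, 260, 12.2),
    Segment("tree", 190, 230, 35.0),
    Segment("sign", 400, 420, 12.1),
]
print(group_segments(segments))  # → [['hood_left', 'hood_right'], ['sign'], ['tree']]
```

Image adjacency alone would wrongly merge the tree with the hood, and range alone would wrongly merge the sign; requiring both is what the fused data buys.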

The higher-level processes, in particular, require inference, an AI method explained earlier in this article. Although the AI system knows how the information gathered by various sensors can be fused to provide object identification and scene interpretation, it will never know in advance all the possible combinations of inputs that might occur. Inference solves this problem by producing the best possible interpretation of a new situation from a set of already-learned situations.
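As a loose illustration of choosing the best interpretation of a new situation from already-learned ones, the sketch below scores a never-before-seen observation against stored prototypes and returns a normalized confidence for each. It is a deliberately simple stand-in: inference in a deep network is a forward pass through learned weights, not a distance lookup. The feature choices, prototype values, and the `interpret` function are all hypothetical.

```python
import math

# Hypothetical "already-learned situations": one prototype feature vector per
# class, as (height_m, width_m, speed_m_s). Values are illustrative only.
PROTOTYPES = {
    "pedestrian": [1.7, 0.5, 1.2],
    "cyclist": [1.8, 0.6, 5.0],
    "car": [1.5, 1.8, 12.0],
}


def interpret(features, prototypes=PROTOTYPES, temperature=1.0):
    """Return (best_label, scores) for a new feature vector.

    Scores are a softmax over negative Euclidean distances, so the closest
    learned situation scores highest and all scores sum to 1.
    """
    logits = {label: -math.dist(features, proto) / temperature
              for label, proto in prototypes.items()}
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(v - peak) for label, v in logits.items()}
    total = sum(exps.values())
    scores = {label: e / total for label, e in exps.items()}
    best = max(scores, key=scores.get)
    return best, scores


# An observation that matches no prototype exactly.
best, scores = interpret([1.75, 0.55, 4.2])
print(best, {k: round(v, 3) for k, v in scores.items()})
```

The `temperature` parameter controls how sharply confidence concentrates on the nearest prototype; a low score across the board is a hint that the situation is genuinely novel.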

Segmentation Strategies

Autonomous vehicle cost and performance can be dramatically affected by decisions about which processing tasks can (or must) be done onboard the vehicle itself, and which can be performed in the cloud or pre-trained in a datacenter. This segmentation is often applied to inference tasks because of their high computational requirements. Time-critical inference tasks should be performed on the mobile device whenever they don’t exceed its processing or storage capacity. Less time-critical inferences, or those that require a larger knowledge model, may be shipped off to the cloud.
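The segmentation rule described above can be written down as a small decision function. This is a minimal sketch under stated assumptions: each task is characterized only by a deadline, a compute cost, and a model size, and each platform by throughput, memory, and network round-trip time. The `Task`, `Platform`, and `place` names and every number in the example are hypothetical and do not describe any real vehicle or cloud service.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    deadline_ms: float    # how soon the result is needed
    work_gflop: float     # compute per invocation (GFLOP)
    model_mb: float       # size of the knowledge model the task needs


@dataclass
class Platform:
    gflops: float              # sustained throughput (GFLOP/s)
    memory_mb: float           # memory available for models
    round_trip_ms: float = 0   # network round trip; 0 for onboard hardware


def place(task, onboard, cloud):
    """Hypothetical placement rule: keep a task onboard whenever it fits the
    vehicle's compute and memory budget; otherwise offload it to the cloud
    if the network round trip still meets the deadline."""
    onboard_ms = task.work_gflop / onboard.gflops * 1000
    if task.model_mb <= onboard.memory_mb and onboard_ms <= task.deadline_ms:
        return "onboard"
    cloud_ms = cloud.round_trip_ms + task.work_gflop / cloud.gflops * 1000
    if task.model_mb <= cloud.memory_mb and cloud_ms <= task.deadline_ms:
        return "cloud"
    return "infeasible"  # needs a faster or larger onboard platform


# Illustrative numbers only.
car = Platform(gflops=1_000, memory_mb=2_000)
datacenter = Platform(gflops=100_000, memory_mb=1_000_000, round_trip_ms=80)
for task in [
    Task("pedestrian_detection", deadline_ms=50, work_gflop=20, model_mb=200),
    Task("route_replanning", deadline_ms=2_000, work_gflop=50, model_mb=50_000),
    Task("hd_map_matching", deadline_ms=30, work_gflop=5, model_mb=8_000),
]:
    print(task.name, place(task, car, datacenter))
```

The "infeasible" outcome is the interesting one for system design: a time-critical task whose model will not fit onboard forces either a larger onboard platform or a smaller model.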

Likewise, some of the sensor fusion tasks in cars, drones, robots and other autonomously mobile products can be done remotely or handled efficiently by a pre-learned knowledge base, but most sensor fusion must be done onboard, in real time, in a centralized hub on the vehicle or robot itself.

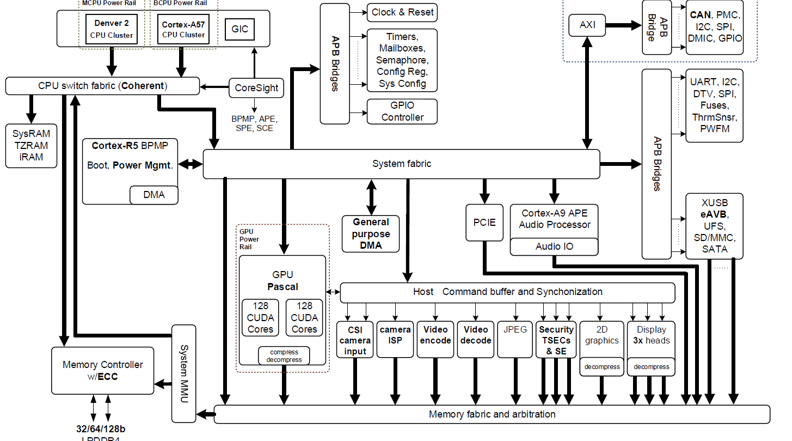

NVIDIA has addressed the segmentation issue with a multi-pronged approach. It has developed both an onboard processor for AI-based sensor fusion and autonomous guidance and control, and (together with Microsoft) the HGX-1 architecture for cloud-based AI datacenters, where the neural networks deployed on vehicle GPUs can be trained and where the extensive knowledge model can reside. The onboard processing system is based on the Xavier SoC mentioned earlier in this story. Billed as “the world’s 1st AI car superchip,” Xavier will run neural networks that have been trained offline in a datacenter.

Some robots and other edge devices must operate independently of external computing resources. For these stand-alone applications, embedded GPU modules such as the Jetson TX2, which pairs a 256-core Pascal GPU with a six-core ARM CPU complex, can deliver the computing power needed to support embedded deep learning and other high-throughput tasks such as image processing.

AI-Capable GPUs Enable Sensor Fusion

Since nearly all AI-enabled edge applications require some type of sensor fusion technology, many vendors are scrambling to deliver products and platforms to meet the demand. Three of the world’s leading chip makers (Intel, Qualcomm, and NVIDIA) and the more specialized Mentor Graphics Corp. have recently announced integrated sensor fusion platforms. These platforms differ from previous sensor-processing systems in two major ways: (1) they accept raw data from multiple sensors, and (2) they use neural networks (often deep learning) and other AI algorithms to process that data and integrate it into a usable form.

Qualcomm’s Drive Data Platform is designed for easy insertion into the autonomous vehicle supply chain. Based on the Snapdragon 820, it makes extensive use of the Snapdragon Neural Processing Engine (SNPE) to process, fuse, and interpret multiple streams of imaging data generated by cameras, radar, and LIDAR.

Meanwhile, Intel has made its own bid for traction in the vehicular sensor fusion platform market by acquiring Israel-based Mobileye, whose fifth-generation System-on-a-Chip (SoC), the EyeQ5, is expected to be deployed in autonomous vehicles by 2020.

NVIDIA, for its part, is collaborating with Bosch on an AI car computer built on its Drive PX platform and the forthcoming Xavier SoC.

AI for Home Security and Appliances

Another large market anticipated for AI technology is home security and home appliances. Toyota Research Institute, for example, is seeking to parlay what it learns about creating autonomous vehicles into household robots.

For these household edge devices, sensor fusion will be as important as it is in autonomous vehicles, and it will introduce some interesting new sensor modalities. For example, Audio Analytic has created a technology that can distinguish sounds that have “non-communicable intent” from both ambient noise and intentional sounds such as speech and music.

This technology enables a sensor fusion AI system equipped with microphones to monitor a household, office, or industrial building and identify any sounds that require an active response, such as glass breaking inside a home or a siren heard outside a car, even when they are faint or nearly drowned out by background noise. The system’s response could range from collecting more data, to sounding an alert, to pulling a car over to the side of the road so that an emergency vehicle can pass.
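Picking out a faint sound against background noise can be illustrated with a classical energy detector that compares each audio frame to an adaptive noise-floor estimate. This is emphatically not Audio Analytic’s technology, which classifies what a sound is rather than merely that it stands out; it is a minimal sketch assuming per-frame energies are already computed (mean-square amplitude, strictly positive) and that the first frame is background. The `detect_events` function and its default parameters are hypothetical.

```python
import math


def detect_events(frame_energies, threshold_db=10.0, alpha=0.05):
    """Return indices of frames whose energy rises well above the noise floor.

    frame_energies: mean-square amplitude per audio frame (all > 0); the first
                    frame is assumed to be background and seeds the floor.
    threshold_db:   how far above the floor a frame must be to count as an event.
    alpha:          smoothing factor for the exponential noise-floor estimate.
    """
    floor = frame_energies[0]
    events = []
    for i, energy in enumerate(frame_energies):
        level_db = 10 * math.log10(energy / floor)
        if level_db >= threshold_db:
            events.append(i)
        else:
            # Update the floor only from frames judged to be background, so a
            # long event cannot raise the floor and mask itself.
            floor = (1 - alpha) * floor + alpha * energy
    return events


# A quiet room with one short, moderately loud sound at frame 5.
quiet_room = [1.0, 1.1, 0.9, 1.0, 1.05, 12.0, 1.0, 0.95]
print(detect_events(quiet_room))
# The same pattern over much louder background noise is still detected,
# because the test is relative to the floor, not an absolute level.
print(detect_events([100 * e for e in quiet_room]))
```

Measuring loudness relative to a tracked floor, rather than against a fixed level, is what lets a detector of this kind respond to faint sounds in quiet settings without firing constantly in noisy ones.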

Robotics and Smart Manufacturing

AI and its associated technologies will also accelerate the evolution of the industrial robots that are already making inroads into the factory labor pool. Today, robots are primarily used for assembling automotive parts and electrical components, but this is expected to change as “cobots” (short for collaborative robots) begin to work alongside humans. Cobots are already at work in Ford’s plant in Cologne, Germany, where they allow humans to focus on non-routine tasks such as customizing car orders.

AI technology is also changing how robots learn to perform complex tasks. One example is NVIDIA’s Isaac robot simulator, an AI-based software platform that lets development teams train and test robots in highly realistic virtual environments. Once a robot has been trained in the virtual environment, its knowledge can be transferred to other models and variants of the original design.

AI Everywhere?

“AI everywhere” is becoming a common phrase as AI finds its way into applications as diverse as personal assistants, personalized recommendations from online retailers, home security, robotics, and smart manufacturing. Of all the technology developments of the past decade, AI is the one most likely to affect the greatest range of products and their design.

About the Author: Alianna J. Maren, Ph.D., is a scientist and inventor, with four artificial intelligence-based patents to her credit. She teaches AI and deep learning in the Master of Science in Predictive Analytics program at Northwestern University’s School of Professional Studies. She is the senior author of the Handbook of Neural Computing Applications (Academic, 1990), and is working on a new book, Statistical Mechanics, Neural Networks, and Machine Learning. Her weekly blog, posted at www.aliannajmaren.com, addresses topics in neural networks, deep learning, and machine learning.

References:

- NVIDIA’s Isaac robot simulator (May 10, 2017): https://nvidianews.nvidia.com/news/nvidia-ushers-in-new-era-of-robotics-with-breakthroughs-making-it-easier-to-build-and-train-intelligent-machines

- Jensen Huang quote that AI is just the “modern way of doing software”: https://techcrunch.com/2017/05/05/ai-everywhere/

- NVIDIA’s Xavier (for autonomous vehicles): https://blogs.nvidia.com/blog/2016/09/28/xavier/

- NVIDIA’s Volta (for AI, inferencing): https://www.nvidia.com/en-us/data-center/volta-gpu-architecture/

- SAE (Society of Automotive Engineers) levels for automobile autonomy: https://www.sae.org/news/3544/

- Industry timeline for different SAE levels: https://venturebeat.com/2017/06/04/self-driving-car-timeline-for-11-top-automakers/

- Qualcomm (total volume of data per vehicle per day by 2020): https://www.qualcomm.com/solutions/automotive/drive-data-platform

- Mentor (total number of sensors per car, shift to a single FPGA-based sensor fusion processor): https://www.techdesignforums.com/blog/2017/04/06/autonomous-vehicle-drs360/

- Qualcomm (wireless as well as other factors): https://www.theregister.co.uk/2017/01/12/ces_2017_all_wireless_energies_are_channelled_to_the_connected_car/

- NVIDIA teams with Microsoft – HGX-1: https://nvidianews.nvidia.com/news/nvidia-and-microsoft-boost-ai-cloud-computing-with-launch-of-industry-standard-hyperscale-gpu-accelerator

- NVIDIA’s Xavier: 1st AI car superchip: https://blogs.nvidia.com/blog/2016/09/28/xavier/

- NVIDIA Jetson TX2: https://devblogs.nvidia.com/parallelforall/jetson-tx2-delivers-twice-intelligence-edge/

- NVIDIA Jetson TX2 specs: https://www.jetsonhacks.com/2017/03/14/nvidia-jetson-tx2-development-kit/tx1vstx2/

- More NVIDIA Jetson TX2 specs (tech details): https://elinux.org/Jetson_TX2

- Qualcomm acquisition of NXP Semiconductors: https://www.theregister.co.uk/2017/01/12/ces_2017_all_wireless_energies_are_channelled_to_the_connected_car/

- Intel acquisition of Mobileye: https://optics.org/news/8/3/19

- Mobileye – 5th gen chip: https://www.mobileye.com/our-technology/evolution-eyeq-chip/

- NVIDIA teams with Bosch: https://blogs.nvidia.com/blog/2017/03/16/bosch/

- Toyota Research Institute – home robotics: https://www.tri.global/about/

- Audio Analytic: https://www.audioanalytic.com/

- Smart manufacturing and robotics (Jan. 27, 2017 article): https://roboticsandautomationnews.com/2017/01/27/smart-factories-to-drive-industrial-robot-market-says-report/10998/

- Cobots allow workers to produce entire systems, not just single components (May 2016): https://news.nationalgeographic.com/2016/05/financial-times-meet-the-cobots-humans-robots-factories/

- Ford’s use of robotics – automobile manufacturing in Germany: https://www.cnbc.com/2016/10/31/ford-uses-co-bots-and-factory-workers-at-its-cologne-fiesta-plant.html

- Robotics on the factory floor in Wisconsin (Aug. 5, 2017 article): https://www.chicagotribune.com/news/nationworld/midwest/ct-wisconsin-factory-robots-20170805-story.html

- Cobots allow manufacturers to create more custom parts (February 23, 2016): https://www.packagingdigest.com/robotics/heres-how-collaborative-robots-provide-custom-automation-cost-benefits-to-manufacturers-2016-02-23

- Customization via cobots (Jan. 13, 2017): https://www.ien.com/automation/article/20849060/collaborative-robots-are-showing-up-in-the-strangest-places