Fibre Channel (FC) storage area networks (SANs) and Inter-Switch Link (ISL) interfaces are an important part of modern data-center systems, both hyperscale and enterprise. An FC SAN is a high-speed network that connects servers and storage devices, while the Inter-Switch Link joins switches and routers and maintains the traffic flow between them.

One benefit of FC is its reliability. It typically runs faster per lane, with lower latency, than Ethernet or SAS interfaces. As a result, it has survived the competition for more than two decades.

Typically, cool- or cold-storage applications use hard disk drives (HDDs) and tape libraries that are networked with FC connectivity. Newer IO interfaces and equipment, such as non-volatile memory express (NVMe) and solid-state drive (SSD) storage devices, direct data through SAN systems and Fibre Channel links (such as 1x, 4x, or 8x). NVMe is a communications interface and driver.

An updated FC-PI-8 specification for 128GFC will be released soon. It’s expected that the new, internal twin-axial cable assemblies will work with SFP 112, QSFP 112, QSFP-DD 112, or OSFP 112 receptacle port connectors — with the cable joined to a PCB connector near the controller chip or possibly directly to the chip packaging.

This would lower the cost of the blade board and relax the performance requirements on the PCB substrate. It would also allow for a slightly longer direct-attach copper (DAC) cable option for Inter-Switch Link applications.

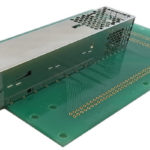

The blue fill area in the QLogic FC image below, an SFP-based example, represents various twin-axial cable assemblies with different termination options.

The next-generation Gen 8 FC SAN switch might have:

- 48 SFP 112 ports and eight QSFP 112 ports;

- QSFP-DD 112 ports; or

- OSFP 112 ports.
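As a rough illustration of what the first option means in electrical lanes, the following minimal sketch adds up the lanes on the front panel. The lane counts per form factor (one for SFP, four for QSFP, eight for QSFP-DD and OSFP) are the commonly used values; the function and variable names are hypothetical and exist only for this example.

```python
# Electrical lanes per port for each form factor (commonly used lane counts).
LANES_PER_PORT = {"SFP": 1, "QSFP": 4, "QSFP-DD": 8, "OSFP": 8}


def total_lanes(port_counts):
    """Sum the electrical lanes over a {form_factor: port_count} mapping."""
    return sum(LANES_PER_PORT[form_factor] * count
               for form_factor, count in port_counts.items())


# First option from the list: 48 SFP 112 ports plus eight QSFP 112 ports.
mixed_config = {"SFP": 48, "QSFP": 8}
print(total_lanes(mixed_config))  # 48*1 + 8*4 = 80
```

Each of those lanes would need its own twin-axial pair set if the ports were cabled internally, which is one reason the harness design matters.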

The update could offer options, such as the ability to use internal twin-axial harnesses or cables. It could also offer a hybrid solution with longer, far-end port cabled links. Any of these features would significantly reduce the baseboard cost.

However, a thermal analysis would be required to validate the internal cable performance. For example, it’s necessary to select and test an 85° C (185° F) or 125° C (257° F) twin-axial cable and jacketing for each application, under the actual operating conditions.

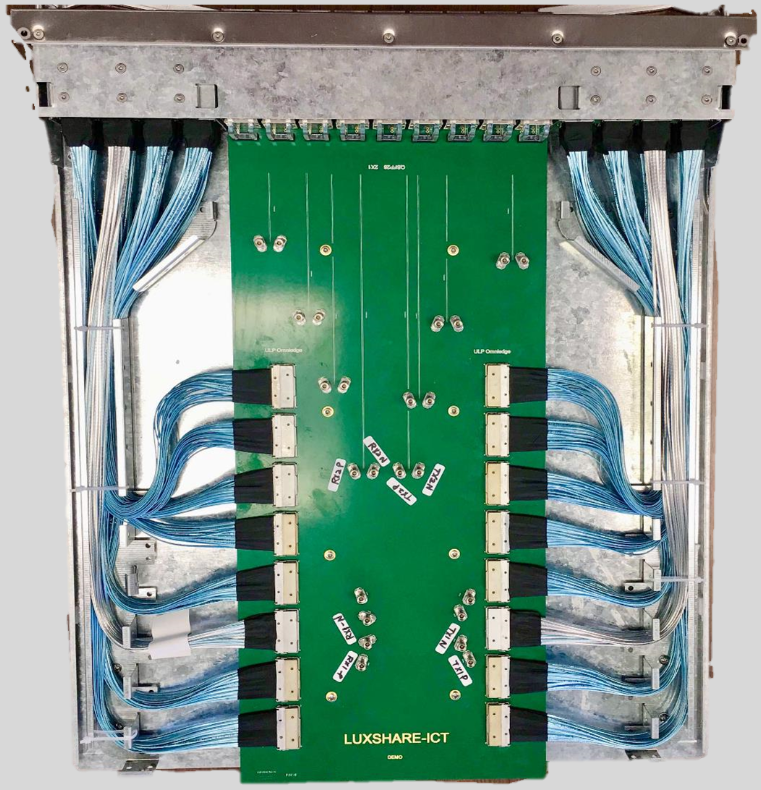

A typical layout places an internal twin-axial cable above or below the baseboard, routed from the port receptacle to a point either near or on the switch chip. Below is a demo box example that uses an internal twin-axial harness or cable running 56.1G PAM4 per pair. This setup could work for Gen 8 FC SAN or Inter-Switch Link applications.

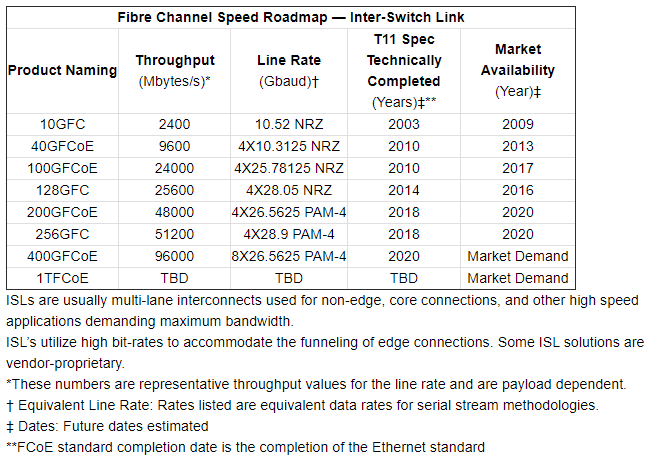

The FCIA’s speed roadmap shows 128 GFC, with a 56.1G line rate, as in development and expected to be released over the next few years. The roadmap lists 2025 as the 256 GFC specification release date but provides no further timeframe. It’s likely the 256 GFC will take additional time to develop and validate.

The FC Inter-Switch Link roadmap indicates that the 400 GFCoE product, with eight lanes of 26.5625 GBd (PAM4 modulation), is complete. New products are expected shortly. It’s likely the 800 GFCoE, with a possible 53G PAM4 per-line rate, will be added to the roadmap soon.
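The lane arithmetic behind these figures can be sketched in a few lines. This is a minimal example that assumes the 26.5625 figure is a symbol rate in GBd and that "53G" stands for 53.125 GBd; both readings are assumptions, and the function names are hypothetical. PAM4 uses four signal levels, so each symbol carries two bits.

```python
PAM4_BITS_PER_SYMBOL = 2  # four amplitude levels encode 2 bits per symbol


def lane_bit_rate_gbps(baud_gbd, bits_per_symbol=PAM4_BITS_PER_SYMBOL):
    """Raw bit rate of one lane in Gb/s, given its symbol rate in GBd."""
    return baud_gbd * bits_per_symbol


def aggregate_gbps(baud_gbd, lanes):
    """Raw aggregate bit rate in Gb/s across all lanes of a link."""
    return lane_bit_rate_gbps(baud_gbd) * lanes


# Eight lanes at 26.5625 GBd PAM4 (the 400G case in the text).
print(lane_bit_rate_gbps(26.5625))  # 53.125
print(aggregate_gbps(26.5625, 8))   # 425.0

# Eight lanes at an assumed 53.125 GBd PAM4 (the possible 800G case).
print(aggregate_gbps(53.125, 8))    # 850.0
```

The raw totals come out about 6% above the nominal 400G and 800G because the line rate also carries encoding and forward-error-correction overhead.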

Currently, the FCIA’s Fibre Channel over Ethernet roadmap covers the 2020 market for the 400 GFCoE. It’s reasonable to question whether there is uncertainty or if this is the end of the roadmap for this application.

Newer Fibre Channel QSFP and QSFP-DD pluggable optical modules might use the new Sumitomo Electric AirEB multi-fiber connector instead of other, more costly multi-fiber push-on (MPO) types. More recent FC SFP pluggable optical modules might also use CN or SN connectors rather than the larger LC types.

Final thoughts

The Fibre Channel SAN market has seen only modest growth, at about 16 million ports per year during the last couple of years. It caters to only a small market segment but holds steady as a critical interconnect product family for some suppliers.

Several storage-centric and enterprise data centers still rely on the latest generation of FC SAN and related links. However, it’s possible that the newer Gen-Z interface and connectivity running at 112G PAM4 per lane could compete for the cool- and cold-storage network applications in the future.

What’s clear is that the 256 GFC links must be developed and fully functional by 2023 or sooner. This is partially being dictated by the T11 and FCIA organizations.

It’s worth questioning: will the 128 or 256 GFC products become part of a data center’s switch radix topology? Will the SFP-DD modules, cables, and connectors be used for higher-density port plate configurations? Or, perhaps, will the QSFP-DD be used for eight-legged breakout external DAC cables or Inter-Switch Links?

As QSFP-DD and OSFP are considered for eight-lane trunk link applications, will the new 16-lane OSFP-XD be required for the next-generation FC applications? Will it work well under higher-heat conditions at 106G PAM4 and 112G PAM4? And what about at 212G PAM4 and 224G PAM4?

It would seem that the FC-PI-8 active-copper cables are useful for those longer-reach copper applications. It will be interesting to find out whether new system IO accelerators eventually replace Fibre Channel SAN or ISL applications in cold-storage data-center architecture.