Robots need more than just artificial intelligence (AI) to be autonomous: they need many sensors, sensor fusion, and real-time inference at the edge. Now that the benefits of deep convolutional neural networks are well established, the need for more advanced processing of lidar data is pushing neural networks toward new topologies that can give robots more autonomy.

When the first robot was invented, between the late 1950s and the early 1960s, it wasn’t called a robot but a “programmed article transfer device,” and it was used on the GM production line to move products around die-casting machines. The opening words of the 1954 patent emphasized the invention’s programmability and versatility, and indicated that programmability required sensors to ensure compliance between the program (the desired trajectory or function) and the actual movement.

To this day, robots have not strayed far from the original concept: they are programmable; they need to sense their environment to ensure compliance between what they do and what they are programmed to do; and they need to move within their environment. What has changed over the past 50 to 60 years is the complexity, the speed, and the range of applications of these basic concepts.

While the first robots were mostly used to move die-casting parts, the father of robotics, Joseph Engelberger, was heavily influenced by Asimov’s first law of robotics: A robot may not injure a human being or, through inaction, allow a human being to come to harm. He deployed robots in areas where they could protect humans. Protecting humans has also been the driving force behind the ever-increasing number of sensors, particularly in collaborative robots (cobots) and automated guided vehicles (AGVs).

What Is Driving the Robotics Industry?

To better understand the quest for autonomous robots, let’s review the “law of intelligence” equation from Alex Wissner-Gross, which describes an entropic force that explains the trend in robotics:

$$F = T \nabla S_{\tau} \qquad (1)$$

where F is the force that maximizes future freedom of action, T is the temperature, which sets the overall strength of the force (the available resources), and Sτ is the entropy of the possible future paths within the time horizon τ.
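To make equation (1) concrete, here is a toy sketch under loudly stated assumptions: a robot confined to a one-dimensional corridor of discrete cells, whose possible futures are all move sequences (left, stay, right) of length τ that stay inside the corridor. Assuming every such path is equally likely, Sτ is the natural log of the path count, and the gradient is approximated with a central difference. The corridor model and all function names are hypothetical, chosen only for illustration.

```python
import math


def path_count(x, tau, width):
    """Count move sequences of length tau (steps of -1, 0, +1) starting at
    cell x that never leave the corridor of cells 0..width-1."""
    counts = [0] * width  # counts[c] = number of valid paths currently ending at cell c
    counts[x] = 1
    for _ in range(tau):
        nxt = [0] * width
        for cell, n in enumerate(counts):
            if n:
                for move in (-1, 0, 1):
                    target = cell + move
                    if 0 <= target < width:
                        nxt[target] += n
        counts = nxt
    return sum(counts)


def path_entropy(x, tau, width):
    """S_tau(x): log of the number of (assumed equally likely) future paths."""
    return math.log(path_count(x, tau, width))


def entropic_force(x, tau, width, temperature=1.0):
    """F = T * dS/dx via a central difference (requires 1 <= x <= width - 2)."""
    ds = (path_entropy(x + 1, tau, width) - path_entropy(x - 1, tau, width)) / 2.0
    return temperature * ds


if __name__ == "__main__":
    for x in range(1, 10):
        print(x, round(entropic_force(x, tau=5, width=11), 3))
```

In this toy model the force is positive near the left wall, negative near the right wall, and zero in the middle: it pushes the robot toward the position where the most future paths stay open, which is the "maximize future freedom of action" behavior the equation describes.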

Robotics as an industry and a science aims to maximize the future freedom of robot actions by increasing embedded analog intelligence. This would entail:

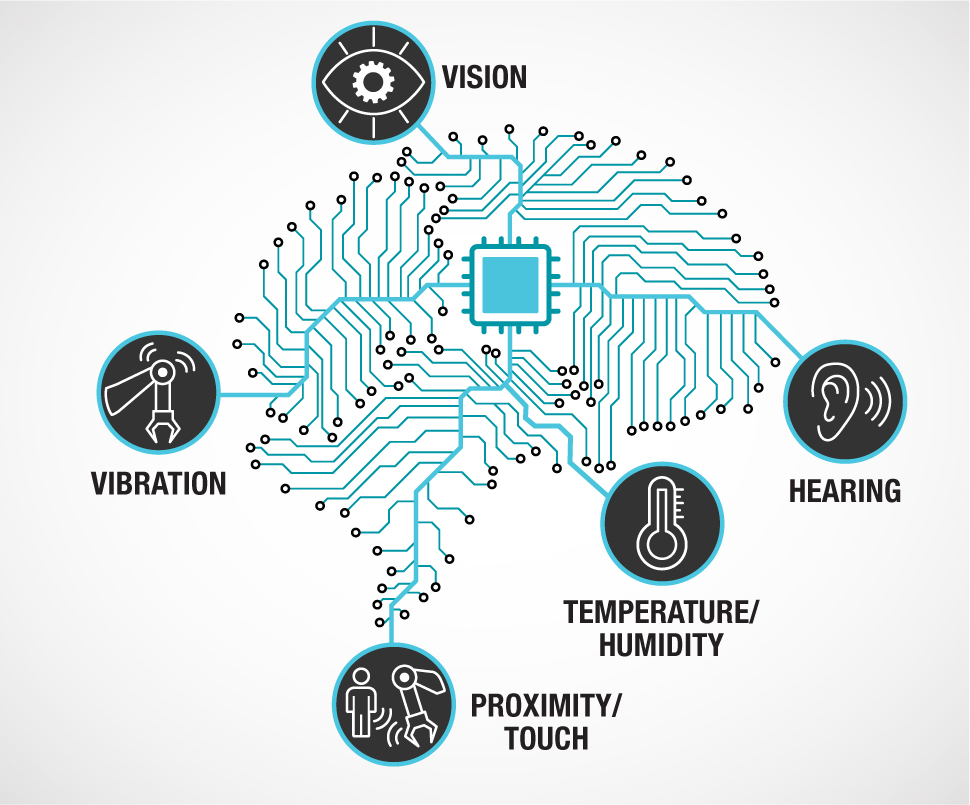

- More sensors for a higher-accuracy model of the robot’s surroundings.

- Better sensor interconnections to the control algorithms (and more decentralized control algorithms).

- Better algorithms to extract the highest amount of information possible from sensor data.

- Better actuators to act faster and more accurately according to the control algorithms’ decisions.

If you look at today’s technology landscape, robots have gained a lot of autonomy and use sensors ranging from complementary metal-oxide semiconductor (CMOS) cameras to lidar and radar to accommodate a wide variety of applications. While cameras have much greater angular resolution and dynamic range than radar, they cannot match the dynamic range of lidar, and they do not work in smoky or dusty environments.

Because robots are designed to be the most flexible option for the broadest range of applications, they need to operate in low-light, dusty, or brightly lit environments. This flexibility is made possible by combining information from several sensors, also known as sensor fusion: data from different sensors is used to reconstruct a resilient representation of the robot’s environment, enabling autonomy in many more applications. For example, if a camera is briefly covered, the other sensors must still allow the robot to operate safely. To give the robot 360-degree awareness of its environment, its sensor data must be routed to the robot controller with low, predictable latency and over as few cables as possible to maximize the reliability of the connection.
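One simple way to picture the covered-camera case is inverse-variance weighting, sketched below. It assumes each sensor gives an independent estimate of the same quantity (say, the distance to an obstacle, in meters) with a known noise variance; the sensor names and numbers are hypothetical. A real robot would typically use a full estimator such as a Kalman filter, so treat this as an illustration of the principle rather than a production fusion scheme.

```python
def fuse_estimates(readings):
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    readings maps a sensor name to (value, variance). A value of None marks a
    sensor that is currently unavailable (for example, a covered camera) and
    is skipped. Returns (fused_value, fused_variance).
    """
    valid = [(v, var) for v, var in readings.values() if v is not None and var > 0]
    if not valid:
        raise ValueError("no sensor data available")
    weights = [1.0 / var for _, var in valid]  # less noisy sensors weigh more
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, valid)) / total
    return fused, 1.0 / total


# Hypothetical distance readings (value in m, variance in m^2).
readings = {"camera": (2.00, 0.04), "lidar": (2.10, 0.01), "radar": (1.90, 0.25)}
print(fuse_estimates(readings))

readings["camera"] = (None, 0.04)  # camera briefly covered
print(fuse_estimates(readings))
```

When the camera drops out, the fused estimate is still available from lidar and radar; only its variance grows, which is the graceful degradation the paragraph asks for.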

Today, high-bandwidth, low-latency buses are mostly based on low-voltage differential signaling (LVDS). However, there is no common standard for LVDS-based sensor interfaces, which fragments the sensor-to-controller ecosystem and makes it difficult to mix and match solutions from different vendors.

Once the sensor data has reached the robot controller, a broad range of new machine learning algorithms based on deep neural networks can help improve the accuracy of the robot’s model of its surroundings. In the words of the deep learning godfathers, Yann LeCun, Yoshua Bengio, and Geoffrey Hinton, “Deep learning allows computational models that are composed of multiple processing layers to learn representations of data with multiple levels of abstraction.” These deep neural networks can run either inside the robot for fast, real-time processing or in the cloud for meta-information gathering or more complex inference.

For most robots, inference at the edge is key to reacting quickly to changes in the environment, because processing data locally avoids the latency of a round trip to the cloud. Edge inference can run convolutional neural networks and similar topologies for image classification or predictive maintenance estimation, a deep Q-network for robot path planning, or custom neural networks designed to address a specific class of problems.
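A deep Q-network approximates the Q-function (the expected return of taking action a in state s) with a neural network. The sketch below swaps in its simplest relative, tabular Q-learning, so that the update rule Q(s, a) ← Q(s, a) + α[r + γ max Q(s′, a′) - Q(s, a)] is visible without a deep learning framework. The 4x4 grid, the blocked cells, the rewards, and the hyperparameters are all hypothetical.

```python
import random

# Hypothetical 4x4 grid of (row, col) cells with two blocked cells.
ROWS, COLS = 4, 4
OBSTACLES = {(1, 1), (1, 2)}
START, GOAL = (0, 0), (3, 3)
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action):
    """Deterministic move; bumping into a wall or obstacle leaves the robot in place."""
    r, c = state[0] + action[0], state[1] + action[1]
    if not (0 <= r < ROWS and 0 <= c < COLS) or (r, c) in OBSTACLES:
        r, c = state
    return (r, c), -1.0  # every move costs 1, so shorter paths earn more reward


def best_action(q, state):
    return max(range(len(ACTIONS)), key=lambda a: q[(state, a)])


def train(episodes=2000, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # Zero initial values are optimistic given the -1 step cost, which encourages exploration.
    q = {((r, c), a): 0.0
         for r in range(ROWS) for c in range(COLS) for a in range(len(ACTIONS))}
    for _ in range(episodes):
        state = START
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = best_action(q, state)  # exploit
            nxt, reward = step(state, ACTIONS[a])
            # The goal is terminal, so it contributes no future value.
            future = 0.0 if nxt == GOAL else q[(nxt, best_action(q, nxt))]
            q[(state, a)] += alpha * (reward + gamma * future - q[(state, a)])
            state = nxt
            if state == GOAL:
                break
    return q


def greedy_path(q, max_steps=50):
    """Follow the highest-valued action from START until GOAL (or a step limit)."""
    path, state = [START], START
    while state != GOAL and len(path) <= max_steps:
        state, _ = step(state, ACTIONS[best_action(q, state)])
        path.append(state)
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

Replacing the dictionary `q` with a neural network that maps a state to one value per action, plus the experience replay and target network used in DQN training, is what turns this into a deep Q-network; on a robot, that trained network is what would run at the edge.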

Looking at the Near Future

In the near future, the sensors themselves are unlikely to change much, but the processing behind them will. Imaging sensors might be hyperspectral or offer higher resolution. Lidar may use longer wavelengths to be safer and offer longer range. Radar sensors may offer more integrated antennas. None of these will be significant changes; what will change is how the information is used and aggregated.

For instance, on the sensor hub, the introduction of single-pair Ethernet (also known as T1) and Power over Data Lines (PoDL), defined in Institute of Electrical and Electronics Engineers (IEEE) 802.3bu-2016, will simplify the design of sensor hub interfaces, allow a wider mix of sensors, and standardize power distribution. On the control side, reinforcement learning will benefit from recent breakthroughs that address challenges such as the high cost of learning from every possible failure and the penalty of learning the wrong behavior because of a skew in the training experience.

On the classification side, most approaches based on convolutional neural networks do not fully extract the 3D information contained in lidar-provided voxels. Next-generation deep neural networks will leverage recent progress in non-Euclidean (or geometric) machine learning, as found in architectures such as PointNet, ShapeNet, SPLATNet, and VoxNet.

Inference at the edge and sensor fusion will merge into what I see as hierarchical inference from multiple sensor sources. Data will flow through simpler inference networks for fast control loops, such as current control neural networks that improve performance over existing proportional-integral-derivative (PID) controllers, all the way up to more complex long short-term memory networks capable of predictive maintenance diagnosis, with position control in between. Neural networks will be able to compensate for slight mechanical inaccuracies in a robot, offering higher position accuracy and smoother motion.
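PointNet’s central idea for unordered lidar points is to apply the same small network to every point and then aggregate with a symmetric function (max pooling), so the resulting global feature does not depend on point order. The sketch below shows only that idea in pure Python: a single shared linear layer with ReLU and randomly initialized, untrained weights stands in for PointNet’s learned multilayer perceptrons, and the input and feature transform networks are omitted. All names here are hypothetical.

```python
import random


def make_layer(in_dim, out_dim, seed=0):
    """Hypothetical untrained weights for one shared layer (stand-in for a learned MLP)."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]
    biases = [rng.uniform(-0.1, 0.1) for _ in range(out_dim)]
    return weights, biases


def shared_point_features(point, weights, biases):
    """Apply the same linear layer + ReLU to one (x, y, z) point."""
    return [max(0.0, sum(w * p for w, p in zip(row, point)) + b)
            for row, b in zip(weights, biases)]


def global_feature(points, weights, biases):
    """Max-pool each feature over all points: a symmetric, order-independent aggregate."""
    per_point = [shared_point_features(p, weights, biases) for p in points]
    return [max(column) for column in zip(*per_point)]


if __name__ == "__main__":
    weights, biases = make_layer(in_dim=3, out_dim=8)
    cloud = [(0.1, 0.2, 0.3), (1.0, -0.5, 0.2), (-0.3, 0.8, 1.1), (0.0, 0.0, 2.0)]
    shuffled = cloud[::-1]
    # Same points in a different order give the same global feature.
    print(global_feature(cloud, weights, biases) == global_feature(shuffled, weights, biases))  # prints True
```

Because the per-point layer is shared and the aggregation is a max, the network can consume raw point sets directly instead of first rasterizing them into voxels, which is one way such architectures try to preserve more of the 3D information.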

Summary

Robot autonomy is a constantly moving target. When George Devol applied for his patent in 1954, his machine was clearly more autonomous than any cam-based or human-operated machine of its time. By today’s standards, it would be a very rigid setup that would not even rank on the autonomy scale. A similarly drastic shift is likely to happen again sooner than we expect.

Wheeled robots and cobots are now considered to be at the edge of autonomy: they can slow down when humans approach them and avoid hitting people even while moving. Given how rapidly embedded analog intelligence is advancing, these innovative ‘at the edge’ robots will likely not be considered autonomous for long, as the industry keeps producing new technologies that make robots more autonomous than ever before.