Astera Labs announced its new Leo Memory Accelerator Platform for Compute Express Link (CXL) 1.1/2.0 interconnects to enable robust disaggregated memory pooling and expansion for processors, workload accelerators, and smart I/O devices. Leo overcomes processor memory bandwidth bottlenecks and capacity limitations while offering built-in fleet management and deep diagnostic capabilities critical for large-scale enterprise and cloud server deployments.

“CXL is a true game-changer for hyperscale data centers, enabling memory expansion and pooling capabilities to support a new era of data-centric and composable compute infrastructure,” said Jitendra Mohan, CEO, Astera Labs. “We have developed the Leo SoC platform in lockstep with leading processor vendors, system OEMs, and strategic cloud customers to unleash the next generation of memory interconnect solutions.”

CXL is a foundational standard and is proving to be a critical enabler to realize the vision of AI in the cloud. Astera Labs is a proud contributor to this exciting technology and is working with key industry leaders developing the CXL technology to accelerate the development and deployment of a robust ecosystem.

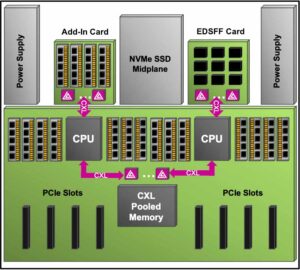

As the industry’s first CXL SoC solution to implement the CXL.memory (CXL.mem) protocol, the Leo CXL Memory Accelerator Platform allows a CPU to access and manage CXL-attached DRAM and persistent memory, enabling the efficient utilization of centralized memory resources at scale without impacting performance.

The Leo Platform of ICs and hardware expands overall memory bandwidth by 32 GT/s per lane and capacity by up to 2 TB, maintains ultra-low latency, and provides server-class reliability, availability, and serviceability (RAS) features for robust and reliable cloud-scale operation.
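To put the 32 GT/s per-lane figure in perspective, the short sketch below converts it to raw bandwidth per direction. The announcement does not state a link width, so the x8 and x16 widths here are assumptions for illustration only. The 128b/130b line coding is the one used by the PCIe 5.0 physical layer that CXL 1.1/2.0 runs on. CXL protocol overhead is ignored, so real throughput would be lower.

```python
# Rough conversion from per-lane transfer rate to raw link bandwidth.
# Assumptions (not from the announcement): link widths of x8 and x16,
# and no accounting for CXL flit or protocol overhead.

GT_PER_S_PER_LANE = 32            # per-lane rate quoted in the text
ENCODING_EFFICIENCY = 128 / 130   # PCIe 5.0 128b/130b line coding


def raw_bandwidth_gbytes_per_s(lanes: int) -> float:
    """Raw bandwidth in GB/s, per direction, for a link of `lanes` lanes."""
    bits_per_s = GT_PER_S_PER_LANE * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    for lanes in (8, 16):  # hypothetical link widths
        print(f"x{lanes}: {raw_bandwidth_gbytes_per_s(lanes):.1f} GB/s per direction")
```

Under these assumptions, an x16 link works out to roughly 63 GB/s in each direction before protocol overhead.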