A large group of researchers at Imperial College London, the University of Edinburgh, the University of Manchester, and Stanford University has recently collaborated on a project exploring the application of real-time localization and mapping tools for robotics, autonomous vehicles, virtual reality (VR) and augmented reality (AR). Their paper, published on arXiv and in Proceedings of the IEEE, outlines methods for evaluating simultaneous localization and mapping (SLAM) algorithms, along with a number of related software and hardware tools.

“The objective of our work was to bring expert researchers from computer vision, hardware and compiler communities together to build future systems for robotics, VR/AR, and the Internet of Things (IoT),” the researchers told Tech Xplore in an email. “We wanted to build robust computer vision systems that are able to perceive the world at very low power budget but with desired accuracy; we are interested in the perception per Joule metric.”

The researchers involved in the project combined their skills and expertise to assemble the algorithms, architectures, tools, and software needed to deliver SLAM. Their findings could help those applying SLAM in a variety of fields to select and configure algorithms and hardware that achieve the best available trade-off between performance, accuracy, and energy consumption.

“An important point in the project is the idea of interdisciplinary research: Bringing experts from different fields together can enable findings that would not be possible otherwise,” the researchers said.

SLAM algorithms are methods that can construct or update a map of an unknown environment while keeping track of a particular agent’s location within it. This technology can have useful applications in a number of fields, for instance in the development of autonomous vehicles, robotics, VR, and AR.

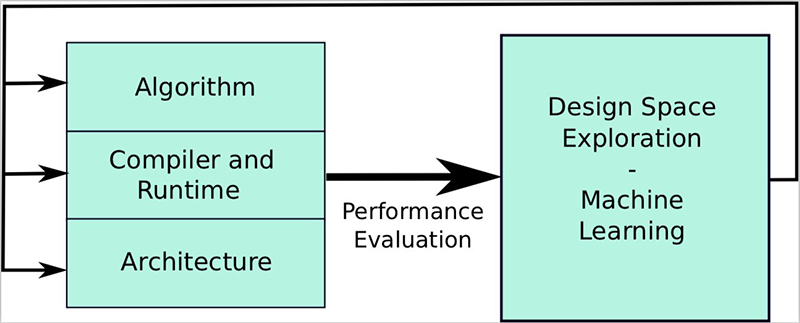

In their study, the researchers developed and evaluated several tools, including compiler and runtime software systems, as well as hardware architectures and computer vision algorithms for SLAM. For instance, they developed benchmarking tools that allowed them to select an appropriate dataset and use it to evaluate SLAM algorithms.

In particular, they used an application called SLAMBench to evaluate the KinectFusion algorithm on several hardware platforms, and SLAMBench2 to compare different SLAM algorithms. The researchers also extended the KinectFusion algorithm so that it can be used in robotic path planning and navigation algorithms, mapping both occupied and free space in the environment.
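To give a sense of what mapping both occupied and free space involves, the sketch below uses a textbook log-odds occupancy grid in one dimension. It is an illustration only, not the extension described in the paper: the function names, the grid size, and the `L_FREE` and `L_OCC` increments are all assumptions chosen for the example.

```python
import math

# Illustrative log-odds increments; these values are assumptions,
# not parameters taken from the paper.
L_FREE = -0.4   # evidence that a cell the ray passed through is free
L_OCC = 0.85    # evidence that the cell where the ray ended is occupied


def update_ray(log_odds, sensor_cell, hit_cell):
    """Update a 1-D log-odds grid with one range measurement.

    Cells between the sensor and the hit become more likely free;
    the hit cell becomes more likely occupied.
    """
    step = 1 if hit_cell >= sensor_cell else -1
    for cell in range(sensor_cell, hit_cell, step):
        log_odds[cell] += L_FREE
    log_odds[hit_cell] += L_OCC
    return log_odds


def probability(value):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(value))


grid = [0.0] * 10            # 0.0 log-odds means "unknown" (p = 0.5)
for _ in range(3):           # three measurements: sensor at cell 0, obstacle at cell 6
    update_ray(grid, 0, 6)
probs = [probability(v) for v in grid]
```

After the three updates, cells 0 to 5 lean towards free, cell 6 leans towards occupied, and cells 7 to 9 remain unknown. A path planner needs exactly this distinction between free and unobserved space, which a surface-only reconstruction does not provide.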

“This project was really broad, thus, findings were quite numerous,” the researchers said. “For example, we have shown practical applications where approximate computing can play a strong role in achieving perception per Joule, for instance the SLAMBench application developed for smart-phones. Approximate computing is the idea to complete a computation task with a given acceptable error, and so produce an approximate solution.”
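The idea of accepting a bounded error in exchange for less work can be illustrated with a small sketch. The example below uses loop perforation, one common approximate-computing technique in which only every n-th element is processed; it is not taken from the project's code, and the function names, the synthetic data, and the 1% error budget are assumptions made for illustration.

```python
def exact_mean(values):
    """Reference result: visit every element."""
    return sum(values) / len(values)


def perforated_mean(values, stride):
    """Approximate the mean by visiting only every `stride`-th element."""
    sampled = values[::stride]
    return sum(sampled) / len(sampled)


def cheapest_stride(values, max_rel_error, strides=(16, 8, 4, 2, 1)):
    """Pick the largest stride whose result stays within the error budget.

    The exact mean is computed here only to calibrate the stride on a
    representative input; later inputs would reuse the chosen stride.
    """
    reference = exact_mean(values)
    for stride in strides:
        approx = perforated_mean(values, stride)
        if abs(approx - reference) <= max_rel_error * abs(reference):
            return stride, approx
    return 1, reference


# Synthetic stand-in for sensor readings (hypothetical data).
values = [1.0 + 0.01 * (i % 50) for i in range(10_000)]
stride, approx = cheapest_stride(values, max_rel_error=0.01)
```

On this synthetic input the largest stride already meets the 1% budget, so roughly one sixteenth of the elements are read. Whether such savings carry over to a real SLAM pipeline depends on the data and on how errors propagate through later stages.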

The project explored the use of new sensing technologies, such as focal-plane sensor-processor arrays, which were found to have low power consumption and high frame rates. In addition, it investigated the application of static, dynamic, and hybrid program scheduling approaches on multicore systems, particularly for the KinectFusion algorithm.
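The difference between static and dynamic scheduling can be seen in a toy model. The sketch below compares the finishing time (makespan) of a fixed, ahead-of-time split of tasks with a schedule that hands each task to whichever worker is free first. It is a simplified illustration rather than the project's scheduler: the task costs are hypothetical, and the model ignores the runtime overhead that dynamic scheduling adds in practice.

```python
import heapq


def static_makespan(task_costs, workers):
    """Static schedule: split tasks into contiguous blocks decided up front."""
    block = -(-len(task_costs) // workers)  # ceiling division
    loads = [sum(task_costs[i:i + block])
             for i in range(0, len(task_costs), block)]
    return max(loads)


def dynamic_makespan(task_costs, workers):
    """Dynamic schedule: each task goes to the worker that becomes free first."""
    finish_times = [0] * workers
    heapq.heapify(finish_times)
    for cost in task_costs:
        earliest = heapq.heappop(finish_times)
        heapq.heappush(finish_times, earliest + cost)
    return max(finish_times)


costs = [8, 7, 6, 5, 1, 1, 1, 1]      # hypothetical, uneven per-task costs
static = static_makespan(costs, 2)    # blocks [8, 7, 6, 5] and [1, 1, 1, 1]
dynamic = dynamic_makespan(costs, 2)
```

With these uneven costs the static split finishes at 26 time units because one worker receives all the expensive tasks, while the dynamic schedule finishes at 15. A hybrid approach, in this simplified picture, would fix part of the assignment in advance and leave the remainder to be balanced at run time.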

“Our research is already having an impact on many fields such as robotics, VR/AR, and IoT, where machines are always-on and are able to communicate and perform their tasks with reasonable accuracy, without interruptions, at very little power consumption,” the researchers said.

This comprehensive project has led to several important findings, and to the development of new tools that could greatly facilitate the implementation of SLAM in robotics, VR, AR, and autonomous vehicles.

The study also made a number of contributions in the context of hardware design, for instance by developing profiling tools to locate and evaluate performance bottlenecks in both native and managed applications. The researchers presented a full workflow for creating hardware for computer vision applications, which could be applied to future platforms.

“We will now use our findings to build an integrated system for robotics and VR/AR,” the researchers said. “For instance, Dr. Luigi Nardi at Stanford University is continuing his research by applying similar concepts to Deep Neural Networks (DNN), i.e. optimizing hardware and software to run DNNs efficiently, while Dr. Sajad Saeedi at Imperial College London is looking at alternative analogue technologies such as focal-plane sensor-processor arrays (FPSPs) that allow DNNs to run at very high frame rates, on the order of 1,000s of FPS, for always-on devices and autonomous cars.”