5G took some fifteen years to develop, starting around 2004 at university research labs. When 3GPP Release 15, the first 5G New Radio (5G NR) standard, was approved in late 2017, the race to deploy began. That includes mmWave frequencies starting around 24 GHz. At roughly the same time, research labs began looking at frequencies above 100 GHz for what might become 6G. Indeed, the second 6G Summit is coming in March 2020.

Several universities around the world have begun looking at frequencies from 100 GHz to 300 GHz as the basis for 6G. While 6G is 12 to 15 years away, we know that despite the bandwidth, latency, and reliability advances of 5G, we will eventually need still better and faster communications.

Researchers at the University of California campuses at Berkeley, Santa Barbara, and San Diego are heavily involved in 6G research. UC Santa Barbara’s ComSenTer (Communications Sensing TeraHertz) has researchers in California and at other universities looking at ICs, circuits, and systems for 6G. 5GTW spoke with ComSenTer Director Prof. Mark Rodwell of UCSB and Co-Director Prof. Ali Niknejad of UC Berkeley to discuss the current state of 6G research.

Prof. Ali Niknejad

UC Berkeley

5GTW: When did you realize that it was possible to develop circuits that operated at frequencies higher than those allocated for 5G?

Niknejad: Let me start with 5G. We started some 19 years ago at Berkeley looking at CMOS technology. We saw the trend lines. We saw the technology crossing 100 GHz. We could certainly build circuits at 60 GHz. We started a measurement-based modeling methodology for transistors and passives. At ISSCC 2004, we demonstrated 60 GHz building blocks using 130 nm technology. We kept working on CMOS 60 GHz devices such as phased arrays and power amplifiers. We were the first to jump on the mmWave craze, a few years before the standards committees started talking about it. We in the research community thought it sounded crazy to use mmWave for mobile communication. We had an arrangement with Samsung to run the numbers and look at the feasibility. Samsung played an instrumental role in using mmWaves. That’s the 5G story.

As for 6G, we found that the 5G technology kept getting better and better. We started looking at performance above 100 GHz a few years ago, before creating the ComSenTer. We did a CMOS 240 GHz communication link to prove that the technology could work. You can communicate at tens of gigabits per second. We were constrained to CMOS at the time. We could see that CMOS limited us in data rates and frequencies above 200 GHz. We at UC Berkeley came together with Mark and formed the ComSenTer with the goal to push device performance beyond 100 GHz with operating distances in hundreds of meters.

We also looked at the system side, not just designing circuits in isolation, but looking at the entire network. What will it look like? What’s the network architecture? That’s why we brought in NYU Wireless and Sundeep Rangan.

Prof. Mark Rodwell

UC Santa Barbara

5GTW: When did UC Santa Barbara begin looking at 100 GHz?

Rodwell: UC Santa Barbara was unusual in that it committed to semiconductors back in the 1980s when other universities were cutting back. Since the 1990s, I’ve been working on high-frequency transistors, not in silicon but in other materials. We built our first 100 GHz amplifier in 1993. We moved to a couple hundred gigahertz in 1998 or so. It was akin to “let’s put a man on the moon because we can, not because we’d want to live there.”

There were a couple of DARPA programs in the early 1990s where we really pushed this. We wanted to push high-frequency electronics. It started with “can we build a transistor that could run at more than 1 THz? Can we make the whole chipset and figure out packaging?” We built a transistor that could handle 1.2 THz while the Northrop people built an amplifier that operated up to 1.0 THz. My team at Teledyne in Santa Barbara built devices only up to 650 GHz but with more complexity. So, we proved that such frequencies were possible but at such high cost, what could you do with it?

With the evolution of CMOS, electronics became much less expensive. There were some limits on power for longer range. So, we at ComSenTer looked for useful things that we could do with the technology. At that point, we decided rather than continuing to push for higher speeds, let’s build something more complex and useful at these frequencies to drive real applications. “Use CMOS whenever you can” is a rule we tried to live by, but when you can’t use CMOS, use SiGe. Beyond that, use InP. While I’m really an InP person, GaN is becoming a mainstream technology. It can produce phenomenal amounts of output power.

5GTW: Why did ComSenTer researchers choose to study frequencies from 100 GHz to 300 GHz?

Rodwell: We chose to start at 100 GHz because there’s so much good work already done below 100 GHz. We’re being funded to look at technologies that are a few more years out. We’re limiting work to 300 GHz because atmospheric conditions make higher frequencies miserable. Unless you’re the Air Force or NASA, building links above 300 GHz is fantasy. Even going above 150 GHz has been questioned. How many nines of reliability can you tolerate? Under commercial service constraints, to get four nines reliability, from DC to about 300 GHz, the dominant worst-case weather condition is rain. But that attenuation levels off when the signal wavelength equals the raindrop size. That’s at about 50 GHz. Once you reach 50 GHz, the atmospheric attenuation doesn’t get worse. The question then becomes “can you build inexpensive electronics at 150 GHz?” You get large bandwidth and because the wavelengths are short, you can build an antenna array with lots of elements in a small space. An array can mathematically produce as many beams as it has antennas, though the signal processing people say divide that number by two. At 140 GHz, wavelength is 2 mm so element spacing is 1 mm. Thus, a 1 cm² array can have 100 elements. If you can get 100 beams, then you can use the allocated spectrum 100 times over.
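As a rough check on the array arithmetic in this answer, the sketch below computes the free-space wavelength at 140 GHz and counts half-wavelength-spaced elements along each edge of a 1 cm by 1 cm square. The function names and the simple edge-counting rule are assumptions made for illustration, not anything taken from ComSenTer.

```python
# Rough check of the array arithmetic quoted in the interview (illustrative only).
C = 299_792_458.0  # speed of light in m/s


def wavelength_mm(freq_ghz: float) -> float:
    """Free-space wavelength in millimetres for a frequency given in GHz."""
    return C / (freq_ghz * 1e9) * 1e3


def elements_in_square(side_mm: float, spacing_mm: float) -> int:
    """Number of elements in a square array.

    Counts whole elements per edge as side / spacing, the same
    simplification the interview uses, then squares it.
    """
    per_edge = int(side_mm / spacing_mm)
    return per_edge * per_edge


lam = wavelength_mm(140)                      # a little over 2 mm
rounded = elements_in_square(10.0, 1.0)       # interview's rounded 1 mm spacing
unrounded = elements_in_square(10.0, lam / 2) # exact half-wavelength spacing

print(f"wavelength at 140 GHz: {lam:.2f} mm")
print(f"elements with 1 mm spacing: {rounded}")
print(f"elements with lambda/2 spacing: {unrounded}")
```

With the rounded 1 mm spacing the count is 100, as quoted. With the unrounded half-wavelength spacing of about 1.07 mm it drops to 81, so "about 100 elements per square centimetre" is best read as an order-of-magnitude figure.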

5GTW: What are the drawbacks?

Rodwell: The penalty, however, is you get very short range, not only because of atmospheric attenuation but because of λ²/R². Our vision is to get complex electronics and use as much CMOS as we can. The output transistor is not likely CMOS because of output power. It’s likely SiGe, GaN, or InP.
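The wavelength-squared over range-squared penalty Rodwell mentions corresponds to the free-space factor in the Friis transmission equation, where received power scales as (λ / 4πR)² for fixed antenna gains. The sketch below is a minimal illustration that assumes isotropic antennas and ignores atmospheric absorption and rain. The function name, the 100 m distance, and the 5 GHz comparison point are illustrative choices.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def free_space_path_loss_db(freq_ghz: float, distance_m: float) -> float:
    """Free-space path loss in dB between isotropic antennas.

    Uses the Friis factor (lambda / (4 * pi * R))**2, expressed as a loss.
    Atmospheric and rain attenuation are NOT included.
    """
    wavelength_m = C / (freq_ghz * 1e9)
    return 20.0 * math.log10(4.0 * math.pi * distance_m / wavelength_m)


loss_5 = free_space_path_loss_db(5.0, 100.0)
loss_140 = free_space_path_loss_db(140.0, 100.0)

print(f"5 GHz over 100 m:   {loss_5:.1f} dB")
print(f"140 GHz over 100 m: {loss_140:.1f} dB")
print(f"extra loss at 140 GHz: {loss_140 - loss_5:.1f} dB")
```

In this model, moving from 5 GHz to 140 GHz at the same distance costs about 29 dB (20·log10 of the frequency ratio of 28), a gap that antenna array gain would have to make up.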

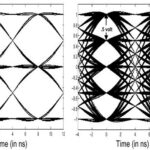

Niknejad: When you go above 100 GHz, you have lots of bandwidth. You can easily build a system with 10 GHz of bandwidth and you can push a lot of data. We were able to push 80 Gbits/s over a single channel link. When you have that much data and you want to further increase capacity with a MIMO system, we think we can get to terabit/s data transfer. When you get to that high data rate using spatial multiplexing, how do you process that data? The problem of taking all that data, quantizing it, processing it, and shipping it creates many problems. For example, you’re limited by ADC power, DAC power, analog signal processing, digital signal processing, and high-speed links. That doesn’t even account for all the DSP you have to do.
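To give a feel for the scale of the data-handling problem described here, the sketch below works out how many 80 Gbit/s spatial streams a 1 Tbit/s aggregate would need, and the raw bit rate coming out of the ADCs for that many channels. The 6-bit converter resolution and the one-ADC-pair-per-stream arrangement are assumptions chosen only for illustration. The interview does not give these figures.

```python
import math


def streams_needed(target_gbps: float, per_stream_gbps: float) -> int:
    """Smallest whole number of spatial streams that reaches the target rate."""
    return math.ceil(target_gbps / per_stream_gbps)


def raw_adc_rate_tbps(n_channels: int, bandwidth_ghz: float,
                      bits_per_sample: int) -> float:
    """Raw ADC output in Tbit/s for complex-baseband sampling.

    A signal of bandwidth B needs I and Q each sampled at about B samples
    per second, so each channel produces 2 * B * bits_per_sample bits/s.
    """
    samples_per_s = bandwidth_ghz * 1e9
    return n_channels * 2 * samples_per_s * bits_per_sample / 1e12


n_streams = streams_needed(1000.0, 80.0)            # 1 Tbit/s from 80 Gbit/s links
adc_tbps = raw_adc_rate_tbps(n_streams, 10.0, 6)    # ASSUMED 6-bit ADCs, 10 GHz each

print(f"streams needed: {n_streams}")
print(f"raw ADC output: {adc_tbps:.2f} Tbit/s")
```

Under these assumptions, 13 streams are needed and the converters alone emit roughly 1.5 Tbit/s of raw samples before any signal processing, which is the kind of load the quantizing and shipping steps would have to absorb.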

Rodwell: In addition to the high-speed circuits, we realize that the challenge comes in doing the back-end work of signal processing. The other problem is “how do we package all of this?”

Niknejad: The downside of using these high frequencies is that the signals don’t bend around corners. Most propagation is line of sight. You can have an outage problem. We start by measuring channels both indoor and outdoor, looking at short and long ranges. What kind of delay spread is there? How much signal processing do we need? How do we deal with the fact that the channel might be line of sight? The network handoff problem is important in 5G and will be more important in 6G. You can walk in front of a tree and lose the connection. The system won’t be robust unless you are connected to multiple base stations at one time. Tracking users and beam steering is much more difficult above 100 GHz. At 5 GHz, the beams are wide. You can track a user packet by packet. At these high frequencies, the signal is very weak without a beam. A base station is basically blind. You don’t see the user locations. We need a clever scheme to quickly figure out which direction to send the information. You might have to do beam steering in both directions.

In 5G, a handset might have four antenna elements. A chipset can drive perhaps twelve antennas but only four at a time. The base stations are doing the heavy lifting of driving say 64 elements.

In our system, you can put more elements in a handset because of the shorter wavelengths. The problem of tracking gets more difficult because of the number of angles to search to find a user. That number has now gone up as the square.

Rodwell: As far as back-end digital beamforming, it’s been a wonderful problem. Besides Ali and Elad Alon at Berkeley, we’ve got help solving these problems from Sundeep Rangan at NYU and Christoph Studer at Cornell. The first time we gave these talks about massive MIMO, people stood up and said “you will need massive control. Phase angles will kill you.” A few months later, we did some calculations. There are a lot of signals coming in and phase errors will produce a lot of crosstalk. But you also have a lot of elements in your antenna array, which means you have lots of oscillators with their own random errors that sort of average out. When you do the calculations, the phase noise problem doesn’t look so scary. We did the same calculations on the ADC resolution. We went back and forth on how many ADC bits we needed, but overall, it’s not as scary as we thought. We saw the same on linear dynamic range and third-order intermodulation distortion and gain compression.
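The averaging effect described above can be illustrated with a toy Monte Carlo experiment. The sketch below assumes each array element has its own oscillator with an independent, zero-mean Gaussian phase error, sums the element signals coherently, and measures the phase error of the combined signal. This is not ComSenTer’s calculation. The model, the 0.1 rad error, and the function name are assumptions, and oscillators locked to a shared reference would have correlated errors that do not average out this way.

```python
import cmath
import math
import random


def combined_phase_std(n_elements: int, sigma_rad: float,
                       trials: int = 2000, seed: int = 0) -> float:
    """Standard deviation (radians) of the phase of a coherent sum.

    Each element contributes a unit phasor with an independent Gaussian
    phase error of standard deviation sigma_rad (a toy model).
    """
    rng = random.Random(seed)
    phases = []
    for _ in range(trials):
        total = sum(cmath.exp(1j * rng.gauss(0.0, sigma_rad))
                    for _ in range(n_elements))
        phases.append(cmath.phase(total))
    mean = sum(phases) / trials
    return math.sqrt(sum((p - mean) ** 2 for p in phases) / trials)


sigma = 0.1  # ASSUMED per-oscillator phase error in radians
single = combined_phase_std(1, sigma)
array64 = combined_phase_std(64, sigma)

print(f"1 element:   {single:.4f} rad")
print(f"64 elements: {array64:.4f} rad")
```

For small errors the combined phase error shrinks roughly as one over the square root of the number of elements, so a 64-element array should show about one eighth of the single-oscillator error in this model.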

As for MIMO, it’s very different at short wavelengths than at lower frequency. It’s much more beam-like and so the number of paths between transmitter and receiver is smaller. In signal-processing terms, the channel is “more sparse.” Computationally, sorting out the channel turns out to be much less mathematically complicated. The ComSenTer has some efficient MATLAB algorithms. If we map that to silicon, do we have something that can support 100 GHz and 100,000 cell phone conversations? That’s 10 Gbps. The answer isn’t there yet, but it’s exciting. The complexity of these problems is looking less extreme than we once thought.

5GTW: How many digital beamforming channels can you support and at what data rate?

Think of it this way: If I have a hundred elements on a phased array antenna, I’ve got a matrix. Beamforming is inverting that matrix. If we were not using mmWaves and were using lasers, I’d simply look to see your location. The communication channel becomes a straight line. In that case, the problem of beamforming becomes a problem of imaging, which is computationally simple. As wavelengths get shorter, the problem becomes one of imaging, making the DSP less complicated.
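The "beamforming is inverting that matrix" idea can be shown at toy scale with two antennas and two data streams. The sketch below is a minimal zero-forcing example: the channel matrix H mixes two transmitted symbols, and applying the inverse of H at the receiver separates them again. The channel values and helper names are made up for illustration. A real 100-element system would be far larger, would include noise, and would more likely use a pseudo-inverse or a regularized inverse than a direct one.

```python
def invert_2x2(h):
    """Inverse of a 2x2 complex matrix given as nested lists."""
    (a, b), (c, d) = h
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("channel matrix is singular; streams cannot be separated")
    return [[d / det, -b / det],
            [-c / det, a / det]]


def matvec(m, v):
    """Multiply a matrix (nested lists) by a vector (list)."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


# HYPOTHETICAL channel: entry [i][j] is the complex gain from stream j to antenna i.
H = [[1.0 + 0.2j, 0.4 - 0.3j],
     [0.3 + 0.5j, 0.9 - 0.1j]]

x = [1 + 1j, -1 + 1j]              # two transmitted symbols (QPSK-like)
y = matvec(H, x)                   # what the two antennas receive (noise-free)
x_hat = matvec(invert_2x2(H), y)   # zero-forcing: undo the mixing

for sent, recovered in zip(x, x_hat):
    print(f"sent {sent}, recovered {recovered:.3f}")
```

When the channel is close to line of sight, as described here, H is dominated by a few strong paths, which is one way to read the remark that the problem turns into imaging and gets computationally simpler.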

One thing we have going in our favor is that there are plenty of test instruments on the market that can operate at 110 GHz to 170 GHz. The number is too large to explain in terms of sales to universities. We picked 140 GHz, 220 GHz, and 300 GHz as frequencies to research. There are industry people looking at 110 GHz to 170 GHz. So, the lower end of this frequency range isn’t as far away as people might think.