Each sensor type, or modality, has inherent strengths and weaknesses. Sensor fusion is the process of using software algorithms to combine inputs from a heterogeneous set of sensors into a single model or image of the environment around a platform. Because the strengths of each sensor offset the weaknesses of the others, the resulting model is more comprehensive, and therefore more accurate, than any single sensor could provide.

This FAQ reviews the basics of sensor fusion, looks at an example of using machine learning to optimize sensor fusion algorithms, considers the use of sensor fusion in industrial internet of things (IIoT) applications, and closes with a look at standardization efforts related to sensor fusion. Subsequent FAQs in this series will review sensor fusion levels and architectures, the use of sensor fusion in robots, and finally, an overview of sensor fusion use in land, air, and sea vehicles.

While the ultimate goal of sensor fusion is to provide more accurate and comprehensive models of the environment around a platform, it can also serve several other functions:

- Resolving contradictions between sensors.

- Synchronizing sensor outputs, for example, by accounting for the short time differences between measurements obtained from multiple sensors.

- Combining heterogeneous sensors to produce an output that is greater than the sum of the inputs.

- Identifying potential sensor failures by detecting when one sensor consistently produces outputs that are implausible compared with those of the other sensors.
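The failure-detection function in the list above can be illustrated with a minimal sketch, assuming three or more redundant sensors measure the same quantity at the same instants. The per-sample median of all sensors serves as the consensus, and a sensor is flagged only if it disagrees with that consensus in most samples, so a single transient spike is tolerated. The function name, `tolerance`, and `min_fraction` are hypothetical choices for illustration, not a standard method.

```python
from statistics import median


def flag_suspect_sensors(readings, tolerance, min_fraction=0.8):
    """Flag sensors whose readings consistently disagree with the group.

    readings: dict mapping a sensor name to a list of readings taken at the
        same instants (all lists the same length, at least three sensors).
    tolerance: largest deviation from the consensus still treated as plausible.
    min_fraction: share of implausible samples needed before a sensor is
        flagged (hypothetical default, chosen for illustration).
    """
    names = list(readings)
    n_samples = len(readings[names[0]])
    misses = {name: 0 for name in names}
    for i in range(n_samples):
        # The median of all sensors is robust to a single faulty sensor,
        # so it serves as the per-sample consensus value.
        consensus = median(readings[name][i] for name in names)
        for name in names:
            if abs(readings[name][i] - consensus) > tolerance:
                misses[name] += 1
    return [name for name in names if misses[name] / n_samples >= min_fraction]


temps = {
    "t1": [20.1, 20.3, 20.2, 20.4],
    "t2": [20.0, 20.2, 20.3, 20.5],
    "t3": [25.9, 26.2, 26.0, 26.1],  # reading several degrees high
}
print(flag_suspect_sensors(temps, tolerance=1.0))  # ['t3']
```

Requiring a persistent disagreement (here, 80% of samples) rather than a single miss is what separates a likely sensor failure from ordinary noise.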

Sensor fusion can be used to create a 9-axis orientation solution from three 3-axis sensors, giving autonomous platforms a more reliable estimate of their attitude and heading on which to base decisions. Combining a gyroscope, magnetometer, and accelerometer provides the benefits of each sensor modality while compensating for their respective weaknesses:

- The gyroscope measures angular rate, from which instantaneous changes in heading, pitch, and roll are tracked, and it is not influenced by lateral acceleration, vibration, or changing magnetic fields. However, it has no absolute reference, so its errors accumulate and its output drifts over time.

- The accelerometer tracks the direction of gravity, while the magnetometer tracks the direction of the Earth’s magnetic field. Both have an absolute long-term reference, but the accelerometer is susceptible to acceleration and vibration, and the magnetometer to interference from changing magnetic fields.
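How these complementary weaknesses cancel can be shown with a complementary filter, one common lightweight fusion technique (not necessarily the one any particular product uses). This minimal sketch estimates pitch only, assuming a particular axis convention and a hypothetical blending weight `alpha`; a full 9-axis solution would add the magnetometer for heading in the same spirit. The integrated gyro supplies short-term accuracy, while a small pull toward the accelerometer's gravity-based angle removes long-term drift.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch (radians) implied by the gravity vector.

    Valid only while the platform is not otherwise accelerating; the axis
    convention used here is an assumption.
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt, alpha=0.98, initial_pitch=0.0):
    """Fuse gyro rate and accelerometer pitch into one pitch estimate.

    samples: iterable of (gyro_rate_rad_s, ax, ay, az) tuples.
    alpha: weight on the integrated gyro (hypothetical value); the remaining
        1 - alpha pulls the estimate toward the drift-free accelerometer angle.
    """
    pitch = initial_pitch
    history = []
    for gyro_rate, ax, ay, az in samples:
        gyro_estimate = pitch + gyro_rate * dt  # integrate the angular rate
        pitch = alpha * gyro_estimate + (1 - alpha) * accel_pitch(ax, ay, az)
        history.append(pitch)
    return history


# Stationary, level platform whose gyro has a constant bias of 0.01 rad/s.
dt = 0.01
bias = 0.01
samples = [(bias, 0.0, 0.0, 9.81)] * 1000  # 10 seconds of data
fused = complementary_filter(samples, dt)
gyro_only = bias * dt * len(samples)  # pure integration drifts without bound
print(round(gyro_only, 3), round(fused[-1], 4))
```

Integrating the gyro alone drifts by 0.1 rad over these 10 seconds and keeps growing, whereas the fused estimate settles at a small constant offset of alpha·bias·dt/(1 − alpha), about 0.005 rad here.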

Sensor fusion is a complex, multi-level process of gathering, filtering, and aggregating sensor data to support the environmental perception needed for intelligent decision-making:

- Level one consists of the raw input data collected from various sensors and sensor clusters.

- Level two includes filtering, temporal and spatial synchronization of sensor data, and uncertainty modeling that compares the outputs of different sensors.

- Level three covers object detection and feature extraction, generating representations of object attributes such as size, shape, color, and speed.

- Level four aggregates the inputs from level three to identify specific objects and their actual or anticipated trajectories, creating an accurate dynamic model of the environment.
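Two of the level-two steps, temporal synchronization and uncertainty modeling, can be sketched in a few lines. This is a minimal illustration, assuming linear interpolation is adequate to align streams sampled at different instants and that each sensor's uncertainty can be summarized by a single variance; inverse-variance weighting is one simple way to combine such estimates. The radar and lidar readings and variances below are hypothetical.

```python
from bisect import bisect_left


def interpolate(timestamps, values, t):
    """Linearly interpolate a sensor stream at time t.

    timestamps must be strictly increasing; outside their range the nearest
    sample is held rather than extrapolated.
    """
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    i = bisect_left(timestamps, t)  # first index with timestamps[i] >= t
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def fuse_inverse_variance(estimates):
    """Fuse (value, variance) pairs describing the same quantity.

    Each estimate is weighted by 1 / variance, so more certain sensors count
    more; the fused variance is smaller than any single input variance.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical range readings (meters) to the same object from two sensors
# sampled at different instants.
radar_t, radar_r = [0.00, 0.10, 0.20], [50.0, 49.0, 48.0]
lidar_t, lidar_r = [0.05, 0.15], [49.6, 48.6]

t_fuse = 0.15  # align both streams to a common timestamp
radar_at_t = interpolate(radar_t, radar_r, t_fuse)
lidar_at_t = interpolate(lidar_t, lidar_r, t_fuse)

# Assumed measurement variances (m^2); lidar is treated as more precise here.
fused_r, fused_var = fuse_inverse_variance([(radar_at_t, 0.25), (lidar_at_t, 0.01)])
print(round(radar_at_t, 3), round(fused_r, 3), round(fused_var, 4))
```

The fused range lands close to the more precise lidar reading, and its variance (about 0.0096 m²) is lower than that of either sensor alone, which is the quantitative sense in which fusion "balances the strengths" of the inputs.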

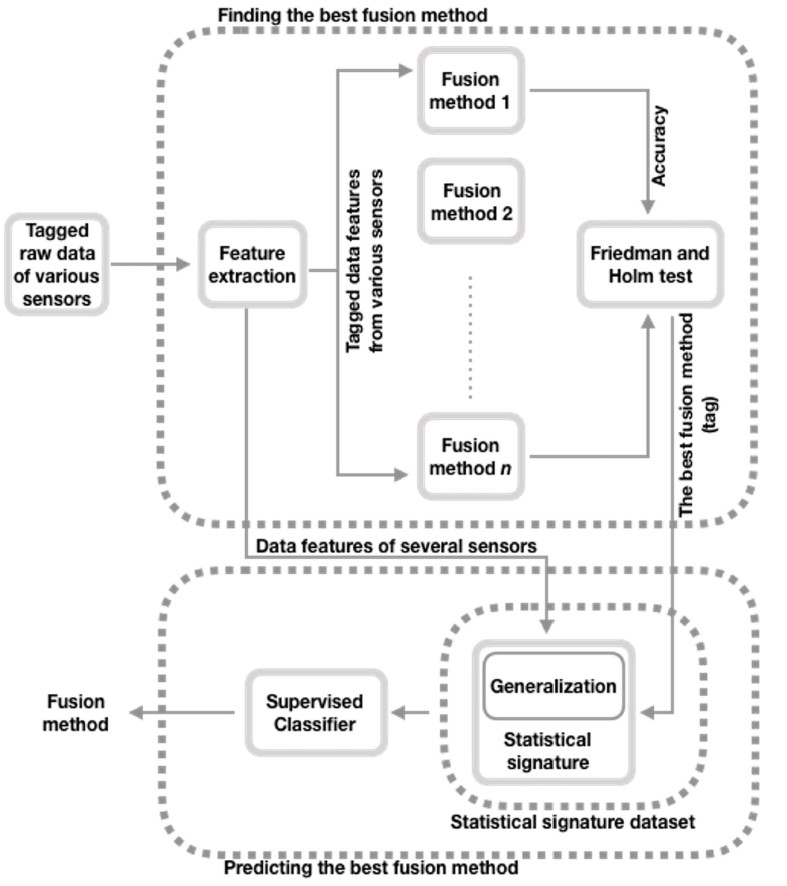

Extraction and aggregation of features are critical activities for successful sensor fusion. Machine learning (ML) algorithms are being developed to compare various fusion methodologies and identify the optimal solution for a given group of sensors in a specific application. One such approach relies on two statistical tests:

- Friedman’s rank test, a non-parametric statistical test, detects differences in output accuracy across multiple sensor fusion methods.

- Holm’s test controls the probability that one or more false positives occur among the individual comparisons (the family-wise error rate) by adjusting the rejection criterion for each individual hypothesis.

Those tests can be used to identify significant differences, in terms of accuracy, between raw extraction and aggregation (defined as the baseline for comparison) and other more complex fusion algorithms such as various forms of voting, multi-view stacking, and AdaBoost methodologies.
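A minimal sketch of how the two tests fit together follows, assuming the common textbook recipe: rank the fusion methods on each dataset, compute the Friedman statistic from the mean ranks, then compare each method against the baseline with a z-test on rank differences and apply Holm's step-down correction. The exact procedure in the cited MDPI study may differ, and the accuracy figures below are hypothetical. With k = 3 methods, the Friedman statistic has k − 1 = 2 degrees of freedom, for which the 5% chi-square critical value is about 5.99.

```python
import math


def average_ranks(scores):
    """scores[d][m]: accuracy of method m on dataset d (higher is better).

    Ranks methods within each dataset (1 = best), averaging ranks for ties,
    and returns each method's mean rank across datasets.
    """
    n_data, k = len(scores), len(scores[0])
    totals = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda m: -row[m])
        pos = 0
        while pos < k:
            end = pos
            while end + 1 < k and row[order[end + 1]] == row[order[pos]]:
                end += 1
            tied_rank = (pos + end) / 2 + 1  # mean of the tied 1-based positions
            for j in range(pos, end + 1):
                totals[order[j]] += tied_rank
            pos = end + 1
    return [t / n_data for t in totals]


def friedman_statistic(ranks, n_data):
    """Friedman chi-square statistic from mean ranks (k - 1 degrees of freedom)."""
    k = len(ranks)
    return 12 * n_data / (k * (k + 1)) * (sum(r * r for r in ranks) - k * (k + 1) ** 2 / 4)


def holm_vs_baseline(ranks, n_data, baseline=0, alpha=0.05):
    """Compare each method with the baseline; Holm step-down correction.

    Returns {method: (p_value, rejected)}.
    """
    k = len(ranks)
    se = math.sqrt(k * (k + 1) / (6 * n_data))
    pvals = {}
    for m in range(k):
        if m != baseline:
            z = (ranks[m] - ranks[baseline]) / se
            pvals[m] = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    ordered = sorted(pvals, key=pvals.get)  # smallest p-value first
    rejected = set()
    for i, m in enumerate(ordered):
        if pvals[m] > alpha / (len(ordered) - i):
            break  # Holm stops at the first hypothesis it cannot reject
        rejected.add(m)
    return {m: (pvals[m], m in rejected) for m in pvals}


# Hypothetical accuracies; columns are [baseline, voting, stacking].
scores = [
    [0.81, 0.84, 0.86], [0.78, 0.80, 0.83], [0.90, 0.90, 0.92],
    [0.75, 0.79, 0.80], [0.82, 0.85, 0.84], [0.70, 0.74, 0.77],
    [0.88, 0.89, 0.91], [0.79, 0.83, 0.85], [0.84, 0.86, 0.87],
    [0.73, 0.76, 0.79],
]
ranks = average_ranks(scores)
stat = friedman_statistic(ranks, len(scores))
print(ranks, round(stat, 2))
print(holm_vs_baseline(ranks, len(scores)))
```

Holm's correction is less conservative than a plain Bonferroni adjustment: only the smallest p-value is tested at alpha / m, and the thresholds relax step by step for the remaining comparisons.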

IIoT sensor fusion

Sensor fusion is expected to be a key factor in maximizing the utility of the IIoT, providing the comprehensive real-time operational data needed to avoid unexpected maintenance and unplanned downtime. One use of sensor fusion is to provide context for measurements. For example, monitoring equipment temperature without also monitoring the ambient temperature could lead to a flawed conclusion, because heat from a warm environment can be mistaken for an equipment fault.
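The value of ambient context can be shown with a small sketch that judges temperature rise above ambient rather than the absolute reading alone. The function name and both limits are hypothetical values for illustration; real limits come from the equipment's rating.

```python
def check_motor_temperature(equipment_c, ambient_c, max_rise_c=40.0, max_abs_c=90.0):
    """Return (rise above ambient, alarm flag).

    max_rise_c and max_abs_c are hypothetical limits chosen for illustration.
    """
    rise = equipment_c - ambient_c
    alarm = rise > max_rise_c or equipment_c > max_abs_c
    return rise, alarm


# Same absolute reading, different context.
print(check_motor_temperature(70.0, 35.0))  # (35.0, False): the warm room explains the heat
print(check_motor_temperature(70.0, 10.0))  # (60.0, True): the motor itself is running hot
```

A single-sensor check against a fixed absolute threshold would treat both readings identically.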

Combining a vibration sensor with a speed sensor can provide enhanced information about the condition of motors and gearboxes. Correlating vibration with speed data can reveal shaft misalignment or bearing wear that neither sensor could identify on its own.
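One way the speed sensor adds information is by telling the analysis where to look in the vibration spectrum. This minimal sketch measures vibration amplitude at the first and second multiples ("orders") of the measured shaft speed using a single-bin DFT. It assumes the widely used rule of thumb that a strong 2x component relative to 1x points toward misalignment; the 0.5 ratio threshold is a hypothetical value, and the result is a screening hint, not a diagnosis. Bearing defects appear at non-integer multiples of shaft speed that depend on bearing geometry, so they also depend on knowing the speed.

```python
import math


def amplitude_at(signal, sample_rate_hz, freq_hz):
    """Amplitude of a single frequency component (one-bin DFT)."""
    n = len(signal)
    w = 2 * math.pi * freq_hz / sample_rate_hz
    re = sum(x * math.cos(w * i) for i, x in enumerate(signal))
    im = sum(x * math.sin(w * i) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / n


def order_amplitudes(signal, sample_rate_hz, shaft_rpm, orders=(1, 2)):
    """Vibration amplitude at multiples ("orders") of the measured shaft speed.

    shaft_rpm comes from the speed sensor and locates the running-speed
    components in the vibration signal.
    """
    shaft_hz = shaft_rpm / 60.0
    return {k: amplitude_at(signal, sample_rate_hz, k * shaft_hz) for k in orders}


def misalignment_suspected(amps, ratio_threshold=0.5):
    """Rule-of-thumb screen: strong 2x component relative to 1x.

    ratio_threshold is a hypothetical value for illustration only.
    """
    return amps[2] / amps[1] > ratio_threshold


# Synthetic 1-second vibration record at 1 kHz; shaft at 1800 rpm (30 Hz).
fs, rpm = 1000, 1800
vib = [1.0 * math.sin(2 * math.pi * 30 * i / fs) + 1.5 * math.sin(2 * math.pi * 60 * i / fs)
       for i in range(fs)]
amps = order_amplitudes(vib, fs, rpm)
print({k: round(a, 3) for k, a in amps.items()}, misalignment_suspected(amps))
```

Because the analysis frequencies track the measured rpm, the same check keeps working as the machine speeds up or slows down, which a vibration sensor alone with fixed frequency bands could not do.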

Even more complex sets of sensors can be used simultaneously to analyze multiple operational parameters. As a result, IIoT sensors are increasingly co-packaged with two, three, or even four different sensors in a single package. Co-packaging of multiple sensors reduces system complexity and cost while multiplying the benefits from sensor fusion. A growing range of IoT applications, including wearables, medical devices, drones, white goods, industrial systems, and transportation, are employing co-packaged sensors to monitor pressure, temperature, force, vibration, and more.

Sensor fusion standards

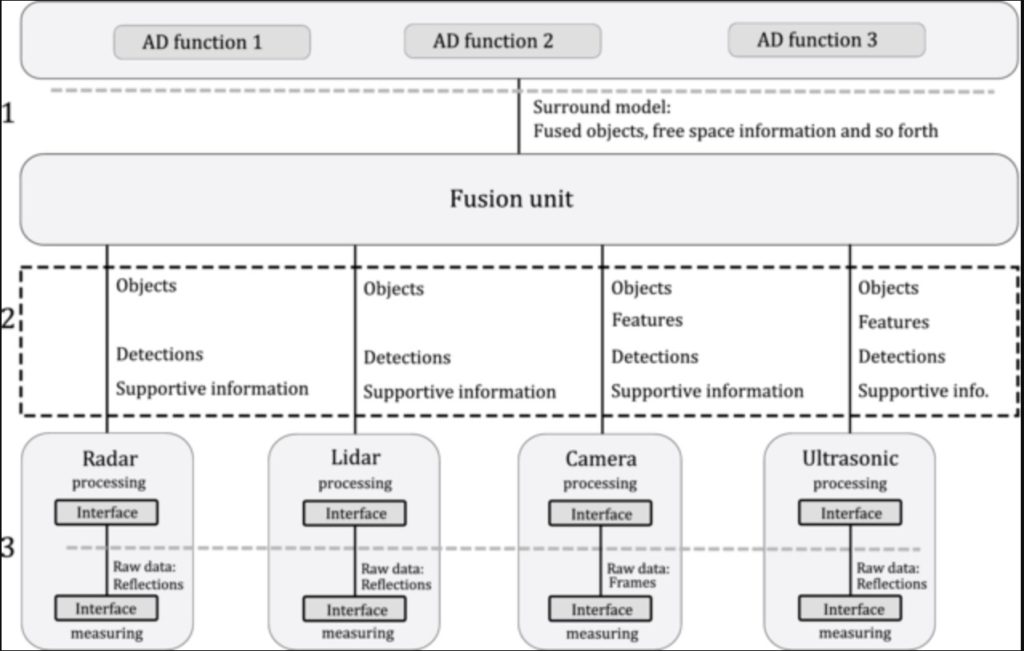

Sensor fusion standards are just beginning to appear. The following are three examples: one for in-vehicle interfaces between sensors and the sensor fusion unit, and two others for the development of ad-hoc sensor networks that fill the gap between traditional sensors, such as radars, lidars, and cameras, and infrastructure-based communication networks.

A standardized logical interface layer between the various sensors and the sensor fusion function is needed to maximize the reusability of sensor fusion applications and to minimize the development time for the sensor-to-fusion communication link(s). That is the goal of ISO 23150:2021. The standard specifies the logical interface between in-vehicle environmental perception sensors, such as radar, lidar, camera, and ultrasonic sensors, and the fusion unit that interprets the scene around the vehicle using a surround fusion model. The interface is described in a modular, semantic representation. It provides information at the object level (for example, potentially moving objects, road objects, and static objects) as well as at the feature and detection levels, based on sensor technology-specific information. ISO 23150:2021 focuses on interface level 2:

- Interface level 1 is the highest logical interface and connects the fusion unit and the automated driving functions.

- Interface level 2 is the middle logical interface and connects sensors and sensor clusters with the fusion unit (the focus of ISO 23150:2021).

- Interface level 3 is the interface layer for the raw data level of a sensing element.

ISO 23150:2021 defines the interface level 2 data communication between sensors and the data fusion unit for automated driving functions (Image: International Organization for Standardization)


Two communications standards are emerging to enhance vehicle sensor networks: the intelligent transport system G5 (ITS-G5) and the cellular V2X (C-V2X) platform. ITS-G5 has been adopted by several automakers, while C-V2X has gained momentum with road infrastructure owner-operators and some carmakers.

ITS-G5 was developed jointly by the CAR 2 CAR Consortium and the European Telecommunications Standards Institute (ETSI). It employs dedicated short-range communications (DSRC) technology that enables vehicles to communicate directly with each other and with other road users without relying on cellular or other infrastructure. ITS-G5 is an ad-hoc sensor network that connects on-vehicle sensors such as cameras and radars with infrastructure-based communication networks.

Also designed for direct communication between vehicles and their surroundings, C-V2X is the newer of the two technologies. It is defined by 3GPP and based on cellular modem technology, resulting in an access layer that is fundamentally different from, and not interoperable with, DSRC. ITS-G5 and C-V2X address the same use cases, and efforts are underway to develop interoperable solutions.

Summary

Sensor fusion is an increasingly important technology in applications ranging from health care to automated vehicles, industrial systems, and even white goods. It brings together measurements from multiple types of sensors to form a more complete picture of the operation of a piece of equipment or of the environment surrounding an automated vehicle. While sensor fusion is not new, recent efforts apply ML to continually improve the performance of sensor fusion systems. In addition, standardization efforts are underway to enable the interoperability of sensor fusion systems across multiple platforms, further amplifying the benefits of the technology. The next FAQ reviews the various levels of sensor fusion and sensor fusion architecture choices, including how they can be structured for IoT applications.

References

Choosing the Best Sensor Fusion Method: A Machine-Learning Approach, MDPI

DSRC vs. C-V2X for Safety Applications, Autotalks

ISO 23150:2021, ISO

Sensor fusion, Wikipedia

Inertial sensors for position and attitude control using advanced sensor fusion algorithms, STMicroelectronics