Sensor fusion is the process of combining inputs from two or more sensors to produce a more complete, accurate, and dependable picture of the environment, especially in dynamic settings. The goal of sensor fusion is to provide those improved results with the minimum number of sensors and minimum system complexity for the lowest cost. The previous FAQ in this series reviewed the basics of sensor fusion. This FAQ dives deeper into the various levels of sensor fusion and looks at different architectures used for sensor fusion systems.

At the most basic level, sensor fusion is categorized as centralized or decentralized, and by the type of data being fused: raw data from sensors, features extracted from sensor data, or decisions made using the extracted features and other information. Depending upon the implementation, sensor fusion can offer several benefits:

- Increased data quality

- Increased data reliability

- Estimation of unmeasured states

- Increased coverage areas

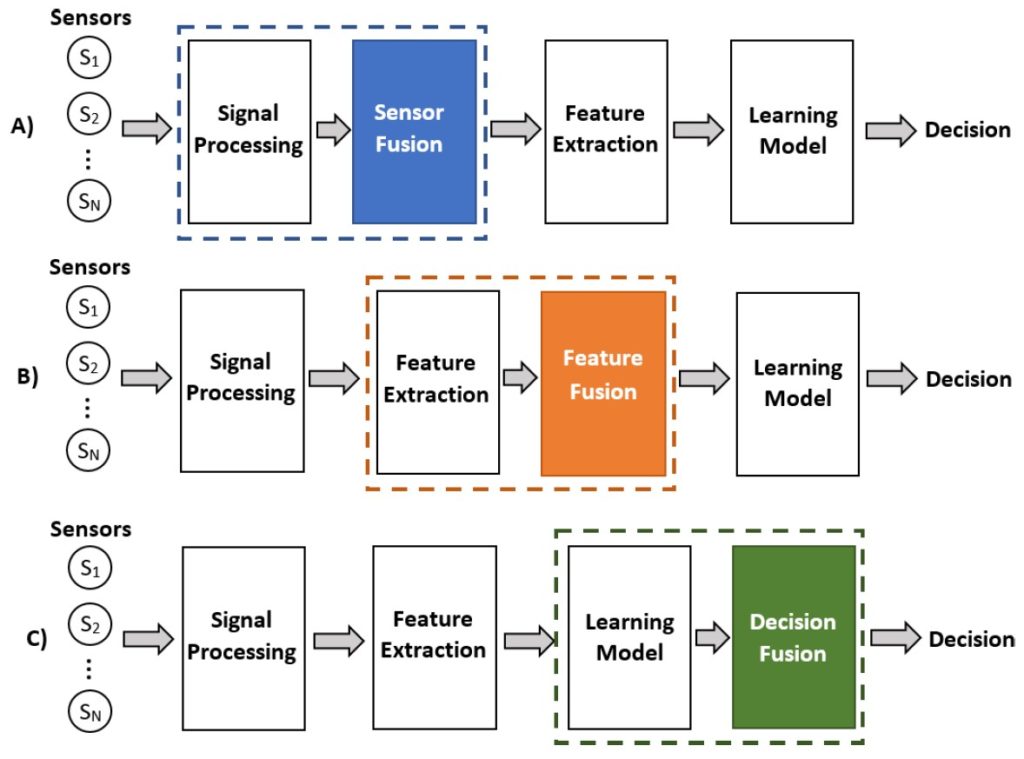

Sensor fusion is a form of data fusion and uses similar statistical, probabilistic, knowledge-based, and inference/reasoning methodologies. Statistical approaches include covariance and cross-covariance. Probabilistic methodologies include Kalman filtering, maximum likelihood estimation, Bayesian networks, and so on. Artificial neural networks, fuzzy logic, and machine learning algorithms are knowledge-based reasoning and inference methods. Sensor fusion typically includes three levels of abstraction:

- Sensor-level abstraction processes the raw sensor data. If multiple sensors are used to measure the same physical attribute, the data can be combined at this level. For sensors measuring different attributes, the data is combined at a higher level.

- Feature-level abstraction extracts features from various independent sensors to produce individual feature vector representations.

- Decision-level abstraction classifies the various features and uses the resulting data to make decisions about the environment and identify any actions that need to be carried out.

Each data/sensor fusion paradigm (statistical, probabilistic, and knowledge-based) can be used at different levels of abstraction. In addition to these three basic levels, hybrid models can be implemented. For example, data from two different sensors can be combined to produce a single feature set, and the resulting classification model can be used at the decision level. Or, the results of feature extraction and decision-level classification for multiple modalities can be used as training data to refine the decision-level classification algorithms for other modalities.
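The three abstraction levels described above can be illustrated with a minimal Python sketch. It is only an illustration under simple assumptions: the function names are hypothetical, sensor-level fusion is reduced to averaging readings of the same quantity, feature-level fusion to concatenating feature vectors, and decision-level fusion to a majority vote. Real systems would typically use more sophisticated methods at each level.

```python
from collections import Counter
from statistics import mean


def fuse_sensor_level(readings):
    """Sensor level: average raw readings of the same physical quantity."""
    return mean(readings)


def fuse_feature_level(*feature_vectors):
    """Feature level: concatenate per-sensor feature vectors into one vector."""
    fused = []
    for vec in feature_vectors:
        fused.extend(vec)
    return fused


def fuse_decision_level(decisions):
    """Decision level: majority vote over per-sensor class labels."""
    label, _count = Counter(decisions).most_common(1)[0]
    return label


# Hypothetical example values, chosen only for illustration.
temperature = fuse_sensor_level([20.0, 21.0, 22.0])
features = fuse_feature_level([0.1, 0.2], [5.0])
decision = fuse_decision_level(["obstacle", "clear", "obstacle"])
```

A hybrid model in this sketch would simply chain the functions, for example by fusing features from two sensors and feeding the result to a single classifier whose output is used at the decision level.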

Sensor source classifications

Sensor fusion architectures can be classified based on the relationships between the multiple sensors in a system:

Complementary sensors provide information that represents different aspects of the environment and can be combined to produce more complete global information. In a complementary implementation, the sensors operate independently and do not directly depend on each other but can be combined to give a more complete image of the phenomenon under observation. For example, combining information from a rotational speed sensor and a vibration sensor can provide enhanced information about the condition of motors and gearboxes. Or, in the case of vision systems, images of the same object from two different cameras or a camera and LIDAR sensor can be combined to provide a more complete “picture” of the environment.

Redundant or competitive sensors provide information about the same target, and their outputs are combined to increase the reliability or confidence of the result. For example, if the fields of view of two cameras overlap, the overlapping area is classified as redundant sensing. In the case of competitive sensing, each sensor measures the same property; in the case of two cameras, both would have the same field of view. There are two cases of competitive configurations: fusion of data from different sensors, or fusion of data from a single sensor measured at different points in time. A special case of competitive sensor fusion, called fault-tolerant fusion, can be used when monitoring critical parameters. Fault-tolerant designs are typically based on modular designs such as N+1 redundant architectures.
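One common way to combine competitive measurements is inverse-variance weighting, sketched below in Python. This is an illustrative technique, not one prescribed by the article. It assumes the sensor errors are independent and unbiased, the variances are known, and the measured quantity is static. The function name and all values are hypothetical.

```python
def fuse_redundant(mean_a, var_a, mean_b, var_b):
    """Inverse-variance weighted fusion of two estimates of the same quantity."""
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_mean = fused_var * (w_a * mean_a + w_b * mean_b)
    return fused_mean, fused_var


# Case 1: two different sensors measuring the same property.
m, v = fuse_redundant(10.0, 4.0, 12.0, 4.0)

# Case 2: one sensor sampled at different points in time (static quantity
# assumed); each new reading is fused into the running estimate.
readings = [10.0, 12.0, 11.0]
sensor_var = 4.0
est_mean, est_var = readings[0], sensor_var
for r in readings[1:]:
    est_mean, est_var = fuse_redundant(est_mean, est_var, r, sensor_var)
```

In both cases the fused variance is smaller than the variance of any single reading, which is the sense in which competitive fusion increases confidence in the output.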

Cooperative sensor fusion combines the inputs from multiple sensor modalities, such as audio and visual, to produce more complex information than the individual inputs can provide. For example, two cameras with different viewpoints can be combined to synthesize a three-dimensional representation of the environment. Cooperative sensor fusion is complex, and the results are sensitive to the accumulated inaccuracies of all the included sensors. While competitive sensor fusion can increase accuracy and reliability, cooperative sensor fusion can decrease them, because errors from every contributing sensor propagate into the result.
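The two-camera case can be sketched with the standard pinhole stereo relation, depth = focal length × baseline / disparity, which assumes rectified cameras. The Python example below uses hypothetical camera parameters to show how a small error in one derived quantity (the disparity between the two images) changes the fused depth estimate.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only for illustration.
focal_length_px = 700.0
baseline_m = 0.12

true_depth = stereo_depth(focal_length_px, baseline_m, 8.0)   # about 10.5 m
noisy_depth = stereo_depth(focal_length_px, baseline_m, 7.0)  # about 12.0 m
depth_error = noisy_depth - true_depth                        # about 1.5 m
```

With these assumed numbers, a one-pixel disparity error shifts the depth estimate by roughly 1.5 m, which illustrates why cooperative results are sensitive to errors in the contributing sensors.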

The six levels of sensor fusion

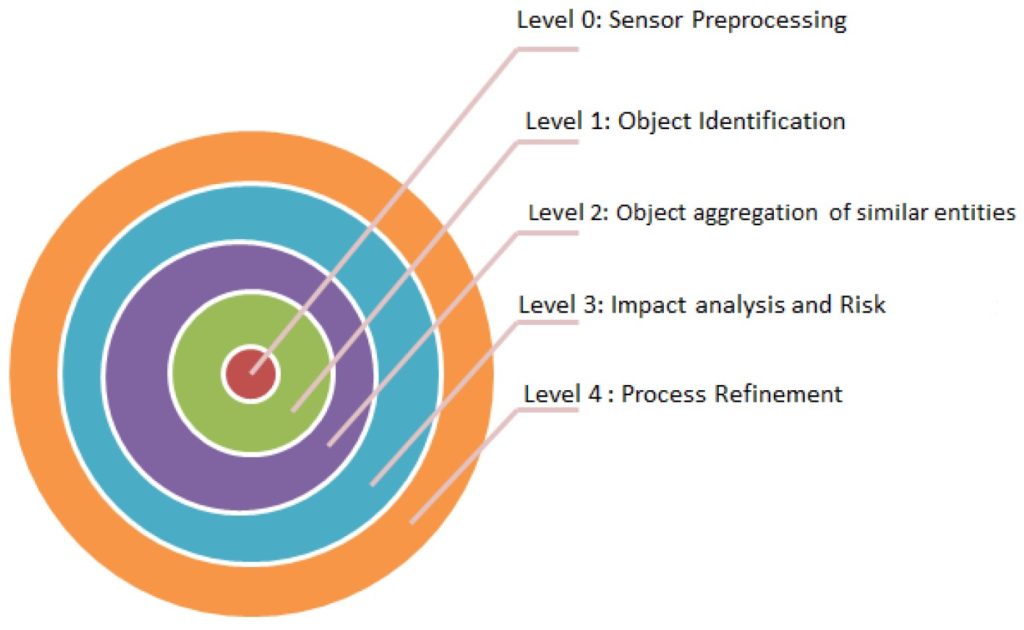

In addition to the various sensor source classifications, there are six commonly recognized “levels” of sensor fusion. Data and sensor fusion has been part of military systems for several decades. The U.S. Department of Defense Joint Directors of Laboratories (JDL) Data Fusion Subgroup developed one of the most important data fusion models. The JDL model incorporates five levels for fusion methodologies, including:

Level 0 — source preprocessing is the lowest level of data fusion. It includes signal conditioning and fusion at the signal level. In the case of optical sensors, it can include fusion at the level of individual pixels. The goal of preprocessing is to reduce the quantity of data while maintaining all of the useful information needed by the higher levels.

Level 1 — object refinement uses the preprocessed data from the previous level to perform spatio-temporal alignment, correlations, association, clustering or grouping techniques, state estimation, the removal of false positives, identity fusion, and the combining of features that were extracted from images. Object refinement results in object classification and identification (also called object discrimination). The output is produced in consistent data formats that can be used for situation assessment.

Level 2 — situation assessment establishes relationships between the classified and identified objects. Relationships include proximity, trajectories, and communications activities and are used to determine the significance of the objects in relation to the environment. Activities at this level include the prioritization of significant activities, events, and any overall patterns. The output is a set of high-level inferences that can be used for impact assessment.

Level 3 — impact assessment evaluates the relative impacts of the detected activities in level 2 to support a situation analysis. In addition, a future projection is made to identify possible near-term vulnerabilities, risks, and operational opportunities. The future projection includes an evaluation of the threat or risk and a prediction of the anticipated outcome.

Level 4 — process refinement is used to improve Levels 0 to 3 and to support sensor and general resource management. Initially, this was a manual task to achieve efficient resource management while accounting for task priorities, scheduling, and controlling available resources. While the goals have not changed, modern systems increasingly supplement manual analysis with AI and ML tools.
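The flow of data through the lower JDL levels can be pictured with a toy Python sketch. It is a heavily simplified illustration, not an implementation of the JDL model. Detections are one-dimensional positions, Level 0 is reduced to averaging repeated raw samples, Level 1 to grouping nearby detections into objects, and Level 2 to a proximity relationship. Levels 3 and 4 are omitted. All function names, thresholds, and values are hypothetical.

```python
def level0_preprocess(raw_samples):
    """Level 0: condition the signal by averaging repeated raw samples."""
    return [sum(group) / len(group) for group in raw_samples]


def level1_group(positions, max_gap=1.0):
    """Level 1: group nearby detections into objects (simple 1-D clustering)."""
    objects, current = [], []
    for p in sorted(positions):
        if current and p - current[-1] > max_gap:
            objects.append(sum(current) / len(current))
            current = []
        current.append(p)
    if current:
        objects.append(sum(current) / len(current))
    return objects


def level2_relations(objects, near=5.0):
    """Level 2: relate objects to each other by proximity."""
    return [(i, j)
            for i in range(len(objects))
            for j in range(i + 1, len(objects))
            if abs(objects[i] - objects[j]) <= near]


# Each inner list holds repeated raw samples for one detection.
raw = [[1.0, 1.2], [1.4, 1.6], [4.0, 4.2], [20.0, 20.2]]
detections = level0_preprocess(raw)      # four conditioned positions
objects = level1_group(detections)       # three objects
relations = level2_relations(objects)    # index pairs of nearby objects
```

Each stage consumes the previous stage's output in a consistent format, mirroring the way the output of one level feeds the next in the description above.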

Sensor fusion architectures for the IoT

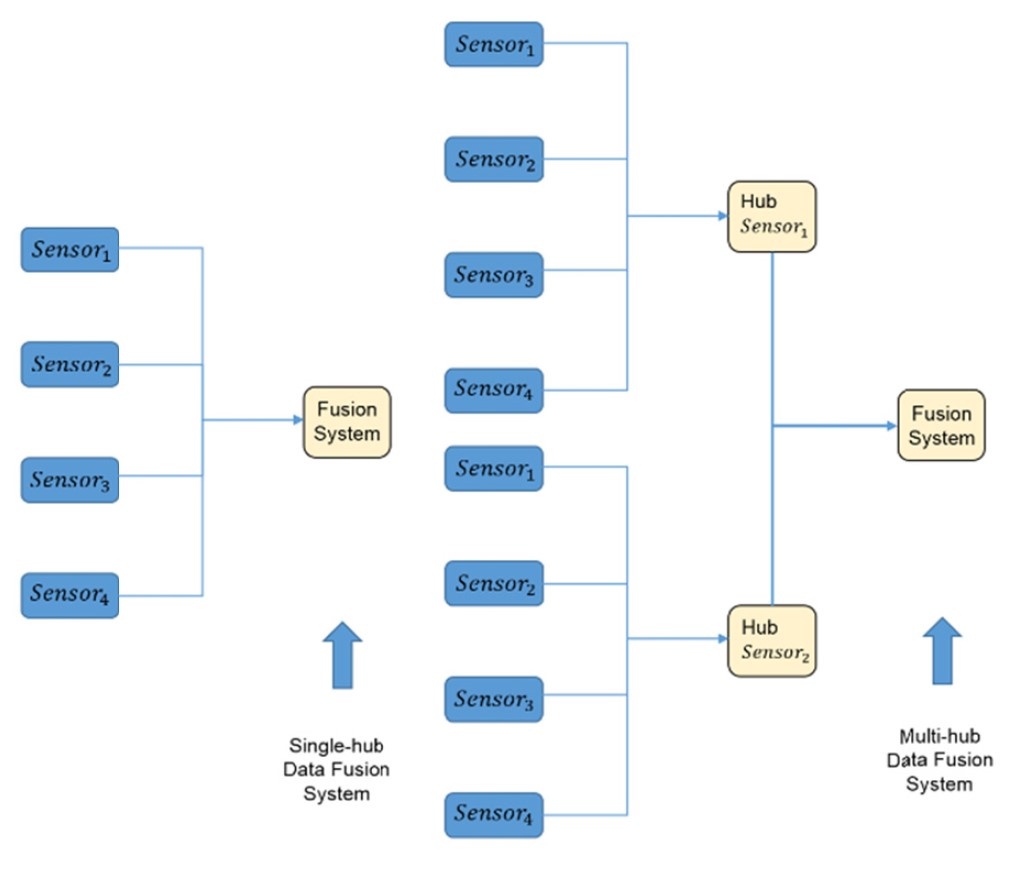

As discussed above, sensor fusion occurs at multiple levels, including the sensor, feature, and decision levels. In the case of the IoT, sensor fusion can also be classified by how the data travels through the wireless sensor network. In a single-hop architecture, every sensor transmits data directly to the data fusion hub. A star network architecture is an example of a single-hop implementation. In a multi-hop architecture, data from one sensor passes through adjacent sensor nodes on the way to the data fusion hub. A mesh network is one form of a multi-hop structure.
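The difference between the two architectures can be made concrete by counting the hops from each sensor to the hub. The Python sketch below uses a breadth-first search over a list of links. The node names and both topologies are hypothetical examples.

```python
from collections import deque


def hops_to_hub(links, hub):
    """Breadth-first search returning each node's hop count to the hub."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    hops = {hub: 0}
    queue = deque([hub])
    while queue:
        node = queue.popleft()
        for nxt in neighbors.get(node, ()):
            if nxt not in hops:
                hops[nxt] = hops[node] + 1
                queue.append(nxt)
    return hops


# Star network: every sensor links directly to the hub (single-hop).
star = [("hub", "s1"), ("hub", "s2"), ("hub", "s3")]
# Mesh network: only s1 reaches the hub directly (multi-hop).
mesh = [("hub", "s1"), ("s1", "s2"), ("s2", "s3"), ("s1", "s3")]

star_hops = hops_to_hub(star, "hub")
mesh_hops = hops_to_hub(mesh, "hub")
```

In the star example every sensor is one hop from the hub, while in the mesh example `s2` and `s3` are two hops away and rely on `s1` to forward their data.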

The multi-hop architecture has several advantages. It supports scalability, since additional sensors placed on the outer edges of the network can still transmit data to the data fusion hub without incurring the energy penalty of long transmission distances. Employing progressive data fusion at each hop minimizes energy requirements and spreads the energy demand across the network. Energy consumption can be reduced further by using channel state information and other inputs to predetermine and minimize the needed transmission energy.
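The energy argument can be illustrated with a rough Python sketch. It assumes a simplified radio model in which the energy to transmit one packet is a fixed electronics cost plus an amplifier cost that grows with the square of the distance. It ignores receive and processing energy, and assumes a fused packet is the same size as a single sensor packet. The constants, units, and chain topology are hypothetical and chosen only to make the comparison easy to follow.

```python
def tx_energy(distance, e_elec=50.0, e_amp=1.0):
    """Assumed per-packet transmit energy: fixed cost plus distance-squared term."""
    return e_elec + e_amp * distance ** 2


def direct_energy(n_nodes, spacing):
    """Single-hop: node i sends straight to the hub over distance i * spacing."""
    return sum(tx_energy(i * spacing) for i in range(1, n_nodes + 1))


def relay_energy(n_nodes, spacing):
    """Multi-hop without fusion: node i's packet is retransmitted over i short hops."""
    transmissions = sum(range(1, n_nodes + 1))
    return transmissions * tx_energy(spacing)


def fused_energy(n_nodes, spacing):
    """Multi-hop with progressive fusion: each node sends one fused packet."""
    return n_nodes * tx_energy(spacing)


# Four sensors in a line, 10 distance units apart, hub at one end.
direct = direct_energy(4, 10.0)
relay = relay_energy(4, 10.0)
fused = fused_energy(4, 10.0)
```

Under these assumptions the totals are 3200 for direct transmission, 1500 for plain multi-hop relaying, and 600 for multi-hop with fusion at each hop. The absolute numbers are arbitrary, but the ordering shows why short hops combined with progressive fusion reduce the total transmission energy.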

Summary

Sensor fusion is a form of data fusion and uses similar methodologies. There are multiple ways to categorize the levels of sensor fusion, and several ways to classify sensor interactions in a given system, including complementary, competitive (or redundant), and cooperative. Multi-modal fusion approaches can address weaknesses in individual sensors and improve the quality and accuracy of the resulting information. Wireless IoT networks present unique challenges and opportunities to optimize the architecture of sensor fusion implementations for maximum energy efficiency.
