by Charles Pao, senior marketing specialist, CEVA

Motion sensing devices are all around us and in many of the electronics that we use daily. Motion sensors are in earbuds – noticing taps to change songs or pausing music when we take them out of our ears. They’re used for head tracking in virtual reality (VR) and augmented reality (AR) headsets used in gaming and training. Motion sensors enable gaming remotes (for orientation), consumer-grade robots (for heading), and your phone (also for orientation). Their sensing is powered by inertial measurement units (IMUs), sensors found in many commonly used consumer electronics, like the ones listed above. And their capabilities are unlocked by sensor fusion. In this article we’ll explore what sensor fusion is and what it can do.

What’s an IMU sensor?

Before we get into sensor fusion, a quick review of the Inertial Measurement Unit (IMU) seems pertinent. An IMU is a sensor typically composed of an accelerometer and a gyroscope, and sometimes also a magnetometer. By combining data from these sensors, a device can get a more complete picture of its orientation and motion state.

- The accelerometer measures acceleration (the rate of change of velocity) along each of its axes, like the force you feel when you step on the gas in your car. At rest, an accelerometer measures only the force of gravity.

- The gyroscope measures angular velocity about its three axes. It outputs the rate of rotation in yaw, pitch, and roll at any given moment.

- A magnetometer, quite simply, measures magnetic fields. With proper calibration in a stable magnetic environment, it can measure the direction of Earth’s magnetic field. From that measurement, it finds the vector toward magnetic north, giving the device an absolute heading.
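To make the division of labor concrete, here is a minimal Python sketch of what the accelerometer and magnetometer can each report on their own. It assumes a device at rest, a calibrated magnetometer held level, and a hypothetical body-axis convention (x forward, y left, z up); the function names are illustrative only.

```python
import math


def tilt_from_accel(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Return (roll, pitch) in radians from a static accelerometer reading.

    Assumes the device is at rest, so the accelerometer measures only
    gravity, and a hypothetical x-forward, y-left, z-up axis convention.
    Pitch is positive nose-up; roll is positive when the y (left) side rises.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(ax, math.hypot(ay, az))
    return roll, pitch


def heading_from_mag(mx: float, my: float) -> float:
    """Return compass heading in degrees (0 = magnetic north, 90 = east).

    Assumes a calibrated magnetometer and a device held level; a tilted
    device would first need its readings rotated using its measured tilt.
    """
    return math.degrees(math.atan2(my, mx)) % 360.0


# Device lying flat and facing east: gravity along z, magnetic north on +y (its left).
print(tilt_from_accel(0.0, 0.0, 1.0))
print(heading_from_mag(0.0, 1.0))
```

The gyroscope is absent here because it reports rates rather than angles; turning its output into orientation requires integrating over time, which is where fusion with these absolute references comes in.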

The IMU’s information is then used to maintain a drone’s balance, improve the heading of a home robot vacuum cleaner, change the orientation of a smartphone screen, and support other motion-related applications.

How IMUs are used in sensor fusion

Now that we understand what makes up an IMU, how does it relate to sensor fusion and why do we care? Well, sensors alone are not that “smart”. They generate raw data. But this raw data must be processed and packaged to become actionable.

Sensors in an IMU are like specialist doctors reading over your patient file: they all have opinions, and their specializations give them insights the others don’t have, but it’s up to you to weigh their opinions and make the final decision. For instance, if the accelerometer suggests that gravity is shifting from pointing downward to a more horizontal angle, but the gyroscope shows almost no rotation, which do you trust? Well, in this scenario, the gyroscope should be trusted more, as it is not influenced by linear acceleration from outside forces. Since the gyroscope tells us that the device’s orientation hasn’t changed, it’s safe to say that the device is undergoing steady linear acceleration, like a car accelerating in a straight line.
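One common way to encode this kind of judgment is a complementary filter: the gyroscope is trusted over short time scales, the accelerometer over long ones, and the accelerometer is ignored outright when its magnitude shows the device is accelerating. The Python sketch below illustrates that idea for a single pitch angle. It is a textbook technique chosen for illustration, not necessarily how any particular product fuses its data, and the function name, axis convention, and tuning values (`alpha`, `gate`) are hypothetical.

```python
import math

GRAVITY = 9.81  # m/s^2


def fuse_pitch(
    pitch_prev: float,
    gyro_pitch_rate: float,
    accel: tuple[float, float, float],
    dt: float,
    alpha: float = 0.98,
    gate: float = 0.1,
) -> float:
    """One step of a complementary filter for pitch, in radians.

    gyro_pitch_rate is in rad/s and accel is (ax, ay, az) in m/s^2, with a
    hypothetical x-forward, y-left, z-up convention (pitch positive nose-up).
    alpha and gate are hypothetical tuning values, not vendor settings.
    """
    # Gyroscope "opinion": integrate angular velocity from the last estimate.
    pitch_gyro = pitch_prev + gyro_pitch_rate * dt

    # If the accelerometer magnitude is far from 1 g, the device is also
    # accelerating linearly, so the accelerometer no longer points at
    # gravity alone; in that case trust the gyroscope only.
    ax, ay, az = accel
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    if abs(magnitude - GRAVITY) > gate * GRAVITY:
        return pitch_gyro

    # Accelerometer "opinion": tilt from the direction of gravity.
    pitch_accel = math.atan2(ax, math.hypot(ay, az))

    # Blend: the gyroscope dominates short-term, while the accelerometer
    # slowly pulls the estimate back and keeps it from drifting.
    return alpha * pitch_gyro + (1.0 - alpha) * pitch_accel


# The car example: strong forward acceleration, no rotation.
print(fuse_pitch(0.0, 0.0, (5.0, 0.0, GRAVITY), dt=0.01))
```

In the car example the accelerometer alone would report a tilt of roughly 27 degrees, but because its magnitude is well above 1 g the filter keeps the gyroscope’s answer: no change in pitch.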

In another scenario, if the gyroscope shows a small, consistent angular velocity, but the accelerometer and the magnetometer show that the device is at rest, then you can trust the two “doctors” that agree and infer that a gyroscope bias is producing a false output.
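The second scenario can be sketched the same way. In the hypothetical Python function below, the accelerometer check rules out shaking or linear motion, the magnetometer heading check rules out turning, and if both agree the device is still, whatever rotation the gyroscope reports over the window is treated as bias. The function name, the single z axis, and the thresholds are assumptions made for illustration.

```python
import statistics
from typing import Optional, Sequence


def estimate_gyro_bias(
    gyro_z: Sequence[float],
    accel_magnitude: Sequence[float],
    mag_heading_deg: Sequence[float],
    accel_std_max: float = 0.05,
    heading_std_max: float = 0.5,
) -> Optional[float]:
    """Estimate z-axis gyroscope bias (rad/s) over a window, or return None.

    accel_magnitude is in m/s^2 and mag_heading_deg in degrees. The
    thresholds are hypothetical; the heading check ignores wrap-around at
    0/360 degrees for brevity.
    """
    if len(gyro_z) < 2:
        return None
    # The accelerometer and magnetometer "doctors" agree the device is still
    # if both of their readings barely vary over the window.
    accel_still = statistics.pstdev(accel_magnitude) < accel_std_max
    heading_still = statistics.pstdev(mag_heading_deg) < heading_std_max
    if not (accel_still and heading_still):
        return None
    # True angular velocity is zero at rest, so the gyroscope's average
    # output over the window is its bias.
    return statistics.fmean(gyro_z)


print(estimate_gyro_bias([0.01] * 100, [9.81] * 100, [45.0] * 100))
```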

These examples show why sensor fusion is essential: by weighing each sensor’s information against the others, it determines the best output. This is how it produces accurate motion, orientation, and heading information.

Exploring the possibilities

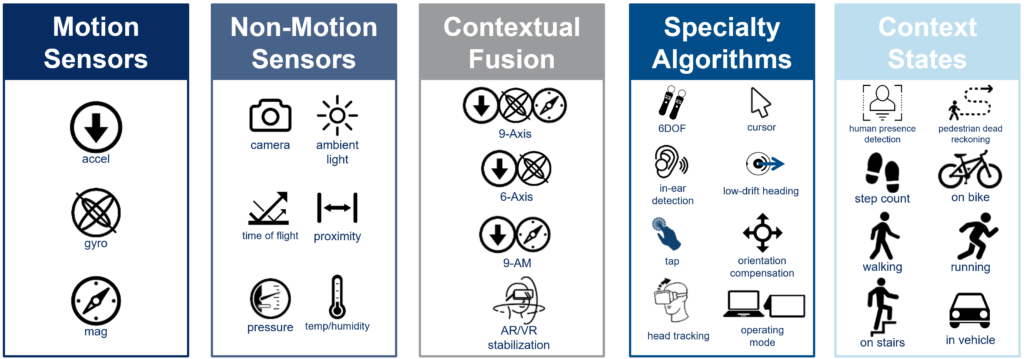

When combined with sensor fusion software, IMUs can deliver not only more accurate motion, orientation, and heading, but also specialized functionality. Thoughtful fusion of IMU data can create a smooth XR experience, with predictive head tracking that minimizes the effects of latency. For wireless presenters or TV remotes, sensor fusion can translate the controller’s 3D motion directly into intuitive 2D motion on-screen. The combination of accelerometer and gyroscope data can also detect complex in-air shapes and gestures. In human navigation, by analyzing data from the accelerometer and gyroscope, sensor fusion can estimate the direction and distance someone has traveled on foot.
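As one illustration of the remote-control case, the Python sketch below maps a handheld controller’s yaw and pitch rates to 2D cursor movement. It assumes the fusion stage already supplies orientation-compensated rates (so that rolling the remote in the hand doesn’t change which way the cursor moves); the function name, sign conventions, gain, and deadband are hypothetical, and this is a simple rate-to-cursor mapping rather than any product’s actual pointing algorithm.

```python
def pointer_delta(
    yaw_rate: float,
    pitch_rate: float,
    dt: float,
    gain: float = 800.0,
    deadband: float = 0.01,
) -> tuple[float, float]:
    """Map controller angular rates (rad/s) to cursor motion (pixels).

    Assumed conventions (hypothetical): yaw_rate is positive when the
    remote turns left, pitch_rate is positive when it tilts up, and screen
    y grows downward. gain (pixels per radian) and deadband (rad/s) are
    illustrative tuning values.
    """

    def shape(rate: float) -> float:
        # Ignore tiny rates from hand tremor or residual gyroscope bias.
        return 0.0 if abs(rate) < deadband else rate

    # Turning left moves the cursor left (negative x);
    # tilting up moves it up (negative y on screen).
    dx = -shape(yaw_rate) * gain * dt
    dy = -shape(pitch_rate) * gain * dt
    return dx, dy


# A 10 ms sample of the remote turning left at 0.5 rad/s.
print(pointer_delta(0.5, 0.0, 0.01))
```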

Sensor fusion doesn’t have to be done solely with the IMU, but it often starts with it. In the XR space, fusing controller orientation with linear position from an external camera can create an effective inside-out 6-DOF system. For robot navigation, fusing an IMU with optical flow and wheel encoder data produces accurate and robust dead reckoning. If there’s motion involved, sensor fusion can likely help.
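A stripped-down version of the robot case fuses just two sources: wheel encoders report how far the robot moved in each interval, and the IMU reports which way it was facing, since a heading computed from wheel-speed differences drifts whenever the wheels slip. The Python sketch below omits optical flow and any error modeling; the `Pose2D` type, the function name, and the east/north frame are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Pose2D:
    x: float = 0.0  # metres east (hypothetical map frame)
    y: float = 0.0  # metres north


def dead_reckon(start: Pose2D, steps: Iterable[Tuple[float, float]]) -> Pose2D:
    """Integrate (wheel_distance_m, imu_heading_rad) pairs into a position.

    Heading is measured counterclockwise from the x (east) axis. The wheel
    encoders supply the distance for each interval; the IMU supplies the
    direction of travel.
    """
    x, y = start.x, start.y
    for distance, heading in steps:
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return Pose2D(x, y)


# One metre east, then one metre north.
print(dead_reckon(Pose2D(), [(1.0, 0.0), (1.0, math.pi / 2)]))
```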

Sensor characterization and calibration

Another piece of sensor fusion is ensuring that the sensors are calibrated appropriately, as IMU sensors are strongly affected by their calibration. Sensor characterization is the process of taking measurements from a sensor under controlled conditions. These measurements can be used to fine-tune how the sensor reacts to various temperatures, operating modes, and motion. Once a sensor has been properly characterized, sensor fusion can help ensure its performance is optimized.
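A simple per-axis model shows what “calibrated” means in practice: subtract a bias, then correct the scale. The Python sketch below derives both from two static readings, one with an accelerometer axis pointing straight up and one pointing straight down, a common textbook method used here for illustration. Real calibrations typically also model cross-axis misalignment and temperature, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AxisCalibration:
    """Per-axis correction: corrected = (raw - bias) * scale."""

    bias: float
    scale: float

    @classmethod
    def from_up_down(cls, reading_up: float, reading_down: float) -> "AxisCalibration":
        """Derive bias and scale from two static accelerometer readings (in g).

        reading_up is taken with the axis pointing straight up (true +1 g)
        and reading_down with it pointing straight down (true -1 g).
        """
        bias = (reading_up + reading_down) / 2.0
        scale = 2.0 / (reading_up - reading_down)
        return cls(bias=bias, scale=scale)

    def apply(self, raw: float) -> float:
        return (raw - self.bias) * self.scale


cal = AxisCalibration.from_up_down(1.05, -0.93)
print(cal.apply(1.05), cal.apply(-0.93))  # approximately +1.0 and -1.0
```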

The sensor characterization process

To properly characterize a sensor, a statistically significant number of sensors need to be placed on a board that can switch their operating modes and log their data. This board should then be placed inside a controlled environment. For example, the board could sit on a two-axis gimbal that rotates it so that all three sensor axes are exercised. By putting this contraption inside a temperature chamber, we can iterate through permutations of temperatures, positions, and operating modes. Knowing exactly how each high-accuracy motor moved, how the temperature changed, and which modes were run, we can acquire a large amount of sensor data to characterize the sensor. To characterize magnetometers, the board can be placed in a Helmholtz coil, which generates a controlled magnetic field.
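As a small example of turning chamber logs into a model, suppose that at each temperature setpoint the board was held still and the sensor’s averaged output was recorded as its bias. The Python sketch below fits a straight line through those points with ordinary least squares. The linear form is an assumption (real sensors may need a higher-order fit), the function name is hypothetical, and the usage data are synthetic values generated from a known line, not measurements.

```python
from statistics import fmean
from typing import Sequence, Tuple


def fit_bias_vs_temperature(
    temps_c: Sequence[float], biases: Sequence[float]
) -> Tuple[float, float]:
    """Ordinary least-squares fit of bias = slope * temp + intercept.

    Each pair is one temperature-chamber setpoint at which the device was
    held still, so the averaged sensor output there is the bias.
    """
    if len(temps_c) != len(biases) or len(temps_c) < 2:
        raise ValueError("need at least two matching (temperature, bias) pairs")
    mean_t = fmean(temps_c)
    mean_b = fmean(biases)
    sxx = sum((t - mean_t) ** 2 for t in temps_c)
    if sxx == 0:
        raise ValueError("need at least two distinct temperatures")
    sxy = sum((t - mean_t) * (b - mean_b) for t, b in zip(temps_c, biases))
    slope = sxy / sxx
    intercept = mean_b - slope * mean_t
    return slope, intercept


temps = [-20.0, 0.0, 25.0, 60.0]
# Synthetic biases lying exactly on a line, for illustration only.
biases = [0.0004 * t + 0.012 for t in temps]
print(fit_bias_vs_temperature(temps, biases))
```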

In order to test how these sensors perform over their lifetime, the sensors can also undergo an artificial aging process by exposing them to extreme conditions of heat and humidity. Then, the same tests can be run with the aged sensors to collect updated data.

With all this data, a model of a typical (nominal) sensor can be built and then used to optimize the sensor’s performance.
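Assuming each characterized unit has been reduced to a linear bias-versus-temperature fit (slope, intercept), the Python sketch below shows one simple way to turn many units into a “typical” sensor: average each parameter across units and record its spread. The means describe the nominal sensor; the standard deviations indicate how much error would remain if every unit received the same nominal correction. This is an illustration of the idea, not the model the author’s company uses, and the names are hypothetical.

```python
from dataclasses import dataclass
from statistics import fmean, stdev
from typing import Sequence, Tuple


@dataclass
class NominalModel:
    slope_mean: float
    slope_std: float
    intercept_mean: float
    intercept_std: float


def build_nominal_model(unit_fits: Sequence[Tuple[float, float]]) -> NominalModel:
    """Summarize per-unit (slope, intercept) fits into a typical sensor."""
    if len(unit_fits) < 2:
        raise ValueError("need fits from at least two units")
    slopes = [s for s, _ in unit_fits]
    intercepts = [i for _, i in unit_fits]
    return NominalModel(
        slope_mean=fmean(slopes),
        slope_std=stdev(slopes),  # sample standard deviation across units
        intercept_mean=fmean(intercepts),
        intercept_std=stdev(intercepts),
    )


print(build_nominal_model([(0.001, 0.01), (0.003, 0.03)]))
```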

By understanding how the sensor behaves, we can also adjust for sensor bias in the accelerometer and gyroscope. These biases show up in what the sensors report while at rest, when the true reading should be zero (or, for the accelerometer, gravity alone). If this sounds familiar, it’s because it’s the second idea discussed in the IMU/doctor analogy section. Adjusting for these biases may seem as simple as applying an offset, but biases can change with temperature and differ from sensor to sensor, even within the same production lot. This bias error is significant, exceeding most other error sources except scale error. However, with the proper sensor fusion algorithms, this calibration can be done dynamically while the device is in use.
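Combining factory characterization with dynamic calibration might look like the Python sketch below: the gyroscope bias is first predicted from temperature, then a unit-specific residual is refined with an exponential moving average, updated only when fusion has decided the device is at rest. This is an illustrative technique, not the dynamic calibration any specific product implements; the class name, the linear temperature model, the learning rate, and the usage numbers are all assumptions.

```python
class GyroBiasTracker:
    """Temperature-compensated gyroscope bias with a runtime correction.

    slope and intercept come from factory characterization (a linear
    bias-versus-temperature model is assumed); residual captures whatever
    the model misses for this particular unit. rate is a hypothetical
    learning rate between 0 and 1.
    """

    def __init__(self, slope: float, intercept: float, rate: float = 0.01) -> None:
        self.slope = slope
        self.intercept = intercept
        self.rate = rate
        self.residual = 0.0

    def bias(self, temp_c: float) -> float:
        return self.slope * temp_c + self.intercept + self.residual

    def update_at_rest(self, gyro_reading: float, temp_c: float) -> None:
        # Call only when fusion has decided the device is still: the true
        # rate is then zero, so any remaining output is unmodeled bias.
        error = gyro_reading - self.bias(temp_c)
        self.residual += self.rate * error

    def correct(self, gyro_reading: float, temp_c: float) -> float:
        return gyro_reading - self.bias(temp_c)


# Illustrative unit whose at-rest output at 25 °C is 0.005 rad/s above the model.
tracker = GyroBiasTracker(slope=0.0004, intercept=0.012)
for _ in range(1000):
    tracker.update_at_rest(0.027, 25.0)
print(tracker.correct(0.027, 25.0))  # close to zero once the residual converges
```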

Putting the pieces together

Using sensors properly requires multiple layers of understanding: how the base sensors work, how to fuse their data into meaningful information, how to build specialized functionality for each application, and how to characterize the sensors to truly optimize performance. This process is complicated, to say the least.

Simplifying it with a large, robust library of sensor fusion functionality can deliver consistent, accurate results. A sensor-agnostic sensor processing software system with comprehensive sensor characterization tools, such as CEVA’s MotionEngine solution, can deliver sensor intelligence for a myriad of electronic devices. Combined with a variety of inertial and environmental sensors, this type of software can unlock new capabilities for a broad range of motion applications and deliver the best possible experience for the end user in significantly less time and with less effort.

Related Resources

Hillcrest Labs MotionEngine

About the Author

Charles Pao started at Hillcrest Labs (acquired by CEVA) after graduating from Johns Hopkins University with a Master of Science degree in electrical engineering. He began in software development, creating a black box system for evaluating motion characteristics. Currently, he is Hillcrest’s first point of contact for information and support and manages its marketing efforts. He’s also held various account and project management roles. Charles also earned Bachelor of Science degrees in electrical engineering and computer engineering from Johns Hopkins University.